Artificial Intelligence (AI) & Machine Learning (ML)

Artificial Intelligence (AI) and Machine Learning (ML) have evolved from theoretical concepts into technologies that now shape industries worldwide. Although both fields have existed for decades, their impact has become truly transformative only recently, thanks in part to breakthroughs by technology companies such as Google. For many years, Google was at the forefront of AI innovation, from the pioneering deep learning research of its Google Brain team to the release of widely adopted tools such as TensorFlow. With the rise of companies like OpenAI, however, the landscape has shifted and the pace of innovation has accelerated. This paper explores the history, development, and applications of AI and ML, highlights the shifting dynamics in the industry, and examines their potential to transform fields ranging from healthcare to the creative arts.

What is Artificial Intelligence (AI)?

AI is a field of computer science focused on creating machines that can perform tasks that typically require human intelligence. These tasks include:

Reasoning: The ability to make decisions based on available data.

Learning: AI systems can improve over time by learning from experience, much like humans do.

Perception: AI can interpret sensory input, like images or sounds, and make sense of it.

Decision-Making: AI can choose actions based on the data it has analyzed, similar to how humans make decisions.

AI also includes subfields like Natural Language Processing (NLP), robotics, and computer vision, which focus on specialized tasks like understanding language, controlling robots, and interpreting images, respectively.

Key Types of AI

Artificial Narrow Intelligence (ANI)

Definition: AI focused on one task, often referred to as "weak AI."

Examples: Virtual assistants (e.g., Siri, Alexa), recommendation systems, and spam filters.

Artificial General Intelligence (AGI)

Definition: Hypothetical AI capable of performing any intellectual task a human can do.

Status: AGI remains theoretical; achieving it is a long-term goal for many AI researchers.

Artificial Superintelligence (ASI)

Definition: Hypothetical AI that would surpass human intelligence in every domain, including creativity and emotional intelligence.

Implications: Raises ethical questions and is often a topic of discussion in both science fiction and philosophical debates.

What is Natural Language Processing (NLP)?

NLP is a specific area within AI that enables machines to understand, interpret, and generate human language. Whether it’s reading a document, translating a text, or holding a conversation, NLP helps AI systems process language in a way that’s similar to how humans communicate. NLP is used in applications like:

Chatbots and virtual assistants (like Siri or Alexa)

Language translation services (such as Google Translate)

Text analysis tools (which can read and summarize documents)

What is Machine Learning (ML)?

Machine Learning (ML) is a subset of AI where machines can learn from data. Rather than being explicitly programmed with hard-coded instructions, ML systems analyze patterns in the data and use that information to make predictions or decisions. As they process more data, they continue to improve.
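As a minimal illustration of learning from data instead of hard-coding a rule, the sketch below fits a straight line to example points with ordinary least squares. The data and the function name `fit_line` are hypothetical; real ML systems learn far more parameters, but the principle of estimating them from examples is the same.

```python
def fit_line(xs, ys):
    """Learn slope and intercept from example (x, y) pairs by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # covariance of x and y divided by variance of x gives the best-fit slope
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# hypothetical training examples; the rule y = 2x + 1 is never written in the code
xs = [1, 2, 3, 4]
ys = [3, 5, 7, 9]
slope, intercept = fit_line(xs, ys)
prediction = slope * 5 + intercept  # prediction for an unseen input x = 5
print(slope, intercept, prediction)  # 2.0 1.0 11.0
```

Adding more (and noisier) examples changes the learned values without any change to the code, which is the sense in which such systems improve with data.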

Some examples of ML include:

Fraud detection: Analyzing financial transactions to spot suspicious activity.

Recommendation engines: Suggesting products based on your browsing history (think Amazon or Netflix).

Autonomous driving: Vehicles whose systems learn from large volumes of driving data to recognize road patterns and adapt to different situations.

Key Machine Learning (ML) Techniques

Supervised Learning

Models are trained on labeled data with known outcomes. Examples: image classification, credit scoring, fraud detection.
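A minimal supervised-learning sketch, assuming a toy labeled dataset: the k-nearest-neighbors classifier below predicts a label for a new point by majority vote among the closest labeled examples. The feature values and the "approve"/"decline" labels are hypothetical stand-ins for a task such as credit scoring, and the function name is illustrative.

```python
import math
from collections import Counter

def knn_predict(training_data, query, k=3):
    """Predict a label for `query` by majority vote of its k nearest labeled examples."""
    nearest = sorted(training_data, key=lambda ex: math.dist(ex[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# hypothetical labeled examples: (feature vector, known outcome)
training_data = [
    ((1.0, 1.0), "approve"), ((1.0, 2.0), "approve"), ((2.0, 1.0), "approve"),
    ((8.0, 8.0), "decline"), ((9.0, 8.0), "decline"), ((8.0, 9.0), "decline"),
]
print(knn_predict(training_data, (1.5, 1.5)))  # approve
print(knn_predict(training_data, (8.5, 8.5)))  # decline
```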

Unsupervised Learning

Models analyze data without labeled outcomes, identifying patterns. Examples: customer segmentation, market basket analysis.
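As a sketch of unsupervised learning, the k-means routine below groups unlabeled points into clusters without being told which group any point belongs to. The two-feature "customer" data and the function name are hypothetical, and initializing centroids from the first k points is a simplification chosen to keep the example deterministic.

```python
import math

def kmeans(points, k, iterations=10):
    """Cluster points into k groups; returns (labels, centroids)."""
    centroids = [list(p) for p in points[:k]]  # simple deterministic initialization
    labels = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        labels = []
        # assignment step: each point joins its nearest centroid
        for p in points:
            idx = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            clusters[idx].append(p)
            labels.append(idx)
        # update step: move each centroid to the mean of its members
        for c in range(k):
            if clusters[c]:
                centroids[c] = [sum(dim) / len(clusters[c]) for dim in zip(*clusters[c])]
    return labels, centroids

# hypothetical customers described by (annual spend, visits per month), no labels
customers = [(1, 1), (9, 9), (1.5, 2), (8, 8.5), (1, 0.5), (9.5, 8)]
labels, centroids = kmeans(customers, k=2)
print(labels)  # [0, 1, 0, 1, 0, 1]
```

The algorithm discovers the low-spend and high-spend segments on its own; interpreting what each segment means is still left to a human analyst.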

Reinforcement Learning (RL)

AI learns through rewards and penalties based on interactions with its environment. Examples: game-playing AI (AlphaGo), robotics.
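A minimal reinforcement-learning sketch, assuming a hypothetical five-cell corridor environment: tabular Q-learning updates its estimate of each state-action value from the rewards it receives, and after training the greedy policy should move toward the goal. The environment, reward, and hyperparameters (`alpha`, `gamma`, `epsilon`) are illustrative choices, not taken from any real system such as AlphaGo.

```python
import random

def q_learning_corridor(n_states=5, episodes=500, alpha=0.5, gamma=0.9,
                        epsilon=0.2, seed=0):
    """Tabular Q-learning on a hypothetical 1-D corridor.

    The agent starts in state 0; reaching the last state ends the episode with
    reward +1. Actions: 0 = move left, 1 = move right.
    """
    rng = random.Random(seed)
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]
    for _ in range(episodes):
        s = 0
        for _ in range(100):  # cap the episode length
            # epsilon-greedy action selection, breaking ties at random
            if rng.random() < epsilon or q[s][0] == q[s][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[s][0] > q[s][1] else 1
            s_next = max(0, s - 1) if a == 0 else s + 1
            reward = 1.0 if s_next == goal else 0.0
            # reward signal plus discounted best future value (none after the goal)
            target = reward if s_next == goal else reward + gamma * max(q[s_next])
            q[s][a] += alpha * (target - q[s][a])
            s = s_next
            if s == goal:
                break
    return q

q = q_learning_corridor()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(len(q) - 1)]
print(policy)
```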

Deep Learning

A subset of ML that uses neural networks with many layers (hence "deep") to learn representations from large datasets. Examples: image recognition, speech recognition, autonomous vehicles.
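To show what a layered network and learning from data look like in practice, the sketch below trains a tiny network with one hidden layer on the XOR problem using backpropagation and full-batch gradient descent, in plain Python. It is the smallest multi-layer case; deep networks stack many more layers and are normally built with libraries such as TensorFlow rather than hand-written loops. The layer sizes, learning rate, and epoch count are assumptions.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_xor(hidden=3, lr=1.0, epochs=5000, seed=0):
    """Train a 2-input, one-hidden-layer network on XOR; returns (initial_mse, final_mse)."""
    rng = random.Random(seed)
    data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]  # input -> hidden
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]  # hidden -> output
    b2 = 0.0

    def forward(x):
        h = [sigmoid(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j]) for j in range(hidden)]
        y = sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + b2)
        return h, y

    def mse():
        return sum((forward(x)[1] - t) ** 2 for x, t in data) / len(data)

    initial = mse()
    for _ in range(epochs):
        g_w1 = [[0.0, 0.0] for _ in range(hidden)]
        g_b1 = [0.0] * hidden
        g_w2 = [0.0] * hidden
        g_b2 = 0.0
        for x, t in data:
            h, y = forward(x)
            # gradient of the mean squared error w.r.t. the output pre-activation
            d_out = 2 * (y - t) * y * (1 - y) / len(data)
            g_b2 += d_out
            for j in range(hidden):
                g_w2[j] += d_out * h[j]
                d_h = d_out * w2[j] * h[j] * (1 - h[j])  # chain rule into hidden unit j
                g_b1[j] += d_h
                g_w1[j][0] += d_h * x[0]
                g_w1[j][1] += d_h * x[1]
        # gradient-descent step after accumulating over all four examples
        b2 -= lr * g_b2
        for j in range(hidden):
            w2[j] -= lr * g_w2[j]
            b1[j] -= lr * g_b1[j]
            w1[j][0] -= lr * g_w1[j][0]
            w1[j][1] -= lr * g_w1[j][1]
    return initial, mse()

initial, final = train_xor()
print(f"MSE before: {initial:.3f}, after: {final:.3f}")
```

With these settings the loss should fall; whether the network fully solves XOR depends on the random initialization and the number of epochs.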

Generative AI and Large Language Models (LLMs)

Generative AI refers to AI systems that can create new content, like text, images, or music, by learning from existing data. This content can include:

Text generation (such as articles or poems)

Image creation (like DALL-E by OpenAI, which generates pictures from text descriptions)

Protein structure predictions (such as AlphaFold by DeepMind, which predicts how proteins fold)

Large Language Models (LLMs) are a specific type of generative AI focused on text. These models are trained on massive datasets to understand and generate human-like text, and they rely on advanced architectures, most notably the Transformer, to process and produce it.
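Real LLMs use Transformers with billions of parameters, but the core generative loop (predict a next word from what came before, append it, repeat) can be sketched with a toy bigram model that simply counts which word follows which. This is a deliberately simplified stand-in, not how GPT works internally; the corpus and function names are hypothetical.

```python
import random
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for every word, how often each other word follows it."""
    words = text.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, start, length, seed=0):
    """Autoregressively sample up to `length` words, each conditioned on the previous one."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        followers = counts.get(out[-1])
        if not followers:  # no known continuation: stop early
            break
        candidates = list(followers)
        weights = [followers[w] for w in candidates]
        out.append(rng.choices(candidates, weights=weights, k=1)[0])
    return out

model = train_bigram("the cat sat on the mat and the cat ate the fish")
words = generate(model, "the", 8)
print(" ".join(words))
```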

Some well-known LLMs include:

GPT (Generative Pre-trained Transformer), created by OpenAI, can write essays, answer questions, and hold conversations.

BERT (Bidirectional Encoder Representations from Transformers), developed by Google, is designed to understand rather than generate text: it represents the meaning of words in context, which is crucial for tasks like search and question answering.

History of AI and ML

The Beginnings (1950s–1970s)

1950: Alan Turing proposed the Turing Test, a method to measure a machine's ability to exhibit intelligent behavior.

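1956: The term "Artificial Intelligence," coined by John McCarthy in the proposal for the Dartmouth Conference, was formally adopted at the conference, which is widely regarded as the founding event of the field.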

Early AI systems focused on symbolic reasoning, using predefined rules to solve problems.

The AI Winters (1970s–1990s)

Progress slowed due to limited computational power and funding challenges.

AI research shifted towards expert systems during this time, but success was mixed.

Resurgence and Growth (1990s–2010s)

Advancements in computational power, data storage, and the internet fueled the resurgence of AI research.

1997: IBM’s Deep Blue defeated world chess champion Garry Kasparov, marking a major milestone in AI.

2012: The rise of deep learning, particularly through models like AlexNet, revolutionized AI, especially in image recognition.

The Modern Era (2010s–Present)

The 2010s saw AI and ML experience a renaissance, driven by the convergence of big data, powerful computational resources, and advanced algorithms. Leading companies like Google and OpenAI played crucial roles in mainstreaming these technologies.

Google’s Breakthroughs:

Deep Learning & Neural Networks: Google Brain, a deep learning research project started at Google in 2011, advanced deep learning and improved image recognition and speech processing.

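2012: Google Brain researchers, including Andrew Ng and Jeff Dean, trained a large neural network on frames from YouTube videos that learned to recognize objects such as cats without labeled data. The same year, Geoffrey Hinton's team at the University of Toronto used a deep convolutional network (AlexNet) to dramatically reduce error rates in image classification; Google acquired Hinton's startup, DNNresearch, in 2013.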

2014: Google acquired DeepMind, which later developed AlphaGo, an AI that defeated Lee Sedol, one of the world's strongest Go players, in 2016. This achievement highlighted AI's capacity to solve complex problems that were thought to require human intuition.

2015: Google open-sourced TensorFlow, a deep learning library that democratized AI research and development and made it easier to integrate AI into a wide range of products and services.

2018: Google unveiled BERT (Bidirectional Encoder Representations from Transformers), which advanced Natural Language Processing (NLP) on tasks such as question answering and sentiment analysis.

OpenAI’s Emergence:

Founded in 2015 by Elon Musk, Sam Altman, and others, OpenAI set out with the mission to ensure that artificial general intelligence (AGI) benefits humanity.

2019: OpenAI introduced GPT-2, the successor to its original 2018 GPT model and a language model capable of generating human-like text, sparking debates on the ethical implications of generative AI.

2020: OpenAI released GPT-3, a model with 175 billion parameters, pushing the boundaries of NLP with capabilities including writing essays, generating code, and answering complex queries.

2022: ChatGPT, based on a GPT-3.5 model, was launched, accelerating AI adoption across a wide range of sectors through its conversational interface.

2023: OpenAI introduced GPT-4, a multimodal model that accepts both image and text inputs and shows improved reasoning abilities.

Google vs OpenAI (Microsoft)

Google and OpenAI are two of the leading companies driving advances in artificial intelligence, but they differ in their approaches and business models. Google integrates AI into its vast array of products, such as Search, YouTube, and its cloud services, while also conducting cutting-edge research through its subsidiary, DeepMind. Its AI tools focus on improving user experiences and optimizing business operations. OpenAI, on the other hand, is focused on developing artificial general intelligence (AGI) that is safe, ethical, and beneficial for humanity. Through models like GPT and its partnership with Microsoft, OpenAI aims to provide powerful AI tools for a wide range of industries, including programming, customer service, and content generation. While Google's AI is deeply embedded in its own ecosystem, OpenAI is more externally focused, offering its AI capabilities through API services and maintaining a strong emphasis on AI governance.

BERT (Bidirectional Encoder Representations from Transformers) is a groundbreaking natural language processing (NLP) model developed by Google in 2018. It was designed to improve the understanding of language context by processing text bidirectionally: when interpreting a word, it considers the words both before and after it in the sentence, rather than reading only from left to right or right to left. This allows BERT to capture the nuances and meanings of sentences much better than previous models, making it highly effective for tasks like understanding search queries, question answering, and text classification. BERT significantly improved Google Search, enabling it to return more relevant results for natural language queries.
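The difference between bidirectional and left-to-right processing can be expressed as an attention mask. In the sketch below (function names are hypothetical), a causal mask lets each position see only itself and earlier words, as in GPT-style models, while a bidirectional mask, as in BERT, lets every position see the whole sentence.

```python
def attention_mask(n, causal):
    """mask[i][j] is True when position i may attend to position j."""
    return [[(not causal) or j <= i for j in range(n)] for i in range(n)]

def visible_context(tokens, i, causal):
    """Tokens that position i can attend to under the chosen mask."""
    row = attention_mask(len(tokens), causal)[i]
    return [tok for tok, allowed in zip(tokens, row) if allowed]

tokens = "the bank of the river".split()
print(visible_context(tokens, 1, causal=True))   # ['the', 'bank']
print(visible_context(tokens, 1, causal=False))  # ['the', 'bank', 'of', 'the', 'river']
```

When encoding "bank", the left-to-right view sees only "the bank", while the bidirectional view also sees "river", the word that disambiguates it.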

The "T" in GPT (Generative Pre-trained Transformer) stands for "Transformer," a model architecture introduced by Google researchers in the 2017 paper "Attention Is All You Need." It revolutionized natural language processing (NLP) and machine learning by introducing a new way of handling sequence data (like text) that relies entirely on a mechanism called self-attention, eliminating the need for recurrence or convolution. The Transformer's ability to model relationships between words that are far apart in a sentence laid the foundation for later models like GPT, which adopted and refined these techniques for generative language tasks.
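The core self-attention computation from "Attention Is All You Need" is softmax(Q K^T / sqrt(d)) V. The plain-Python sketch below applies it to a small sequence of word vectors. As a simplifying assumption, the queries, keys, and values are the input vectors themselves; a real Transformer first multiplies them by learned projection matrices and uses multiple attention heads. The vectors and function names are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention with Q = K = V = x (no learned projections).

    x is a list of n vectors of dimension d; returns (outputs, attention_weights).
    """
    d = len(x[0])
    outputs, weights = [], []
    for query in x:
        # similarity of this query to every key, scaled by sqrt(d)
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in x]
        w = softmax(scores)
        weights.append(w)
        # output is the attention-weighted average of the value vectors
        outputs.append([sum(w[j] * x[j][c] for j in range(len(x))) for c in range(d)])
    return outputs, weights

# three hypothetical 2-dimensional word vectors
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = self_attention(vectors)
for row in attn:
    print([round(w, 2) for w in row])
```

Because every position scores every other position directly, distant words influence each other in a single step, which is the property the paragraph above attributes to the Transformer.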

Google's work on the Transformer architecture directly influenced the development of models like GPT. Although GPT was introduced by OpenAI, it builds on the Transformer framework pioneered by Google, adapting it for generative tasks (like creating coherent text) and pre-training it on large datasets, which allows larger GPT models to perform a wide variety of tasks without task-specific fine-tuning.

GPT stands for Generative Pre-trained Transformer, which is a type of deep learning model used in natural language processing (NLP). It is designed to understand and generate human-like text based on input data. Here's a breakdown of what each part of the term means:

Generative: Refers to the model's ability to generate text, such as answering questions, completing sentences, or even creating entire paragraphs or articles, based on the input it receives.

Pre-trained: Indicates that the model is trained on a large corpus of text data before being fine-tuned for specific tasks. The pre-training helps the model learn patterns, grammar, context, and nuances of human language.

Transformer: This refers to the underlying architecture of the model. The Transformer architecture, introduced in the 2017 paper "Attention Is All You Need," is highly effective at handling sequential data and is the foundation for many state-of-the-art NLP models like GPT. It uses self-attention mechanisms to model the relationships between words in a sentence, regardless of how far apart they are.

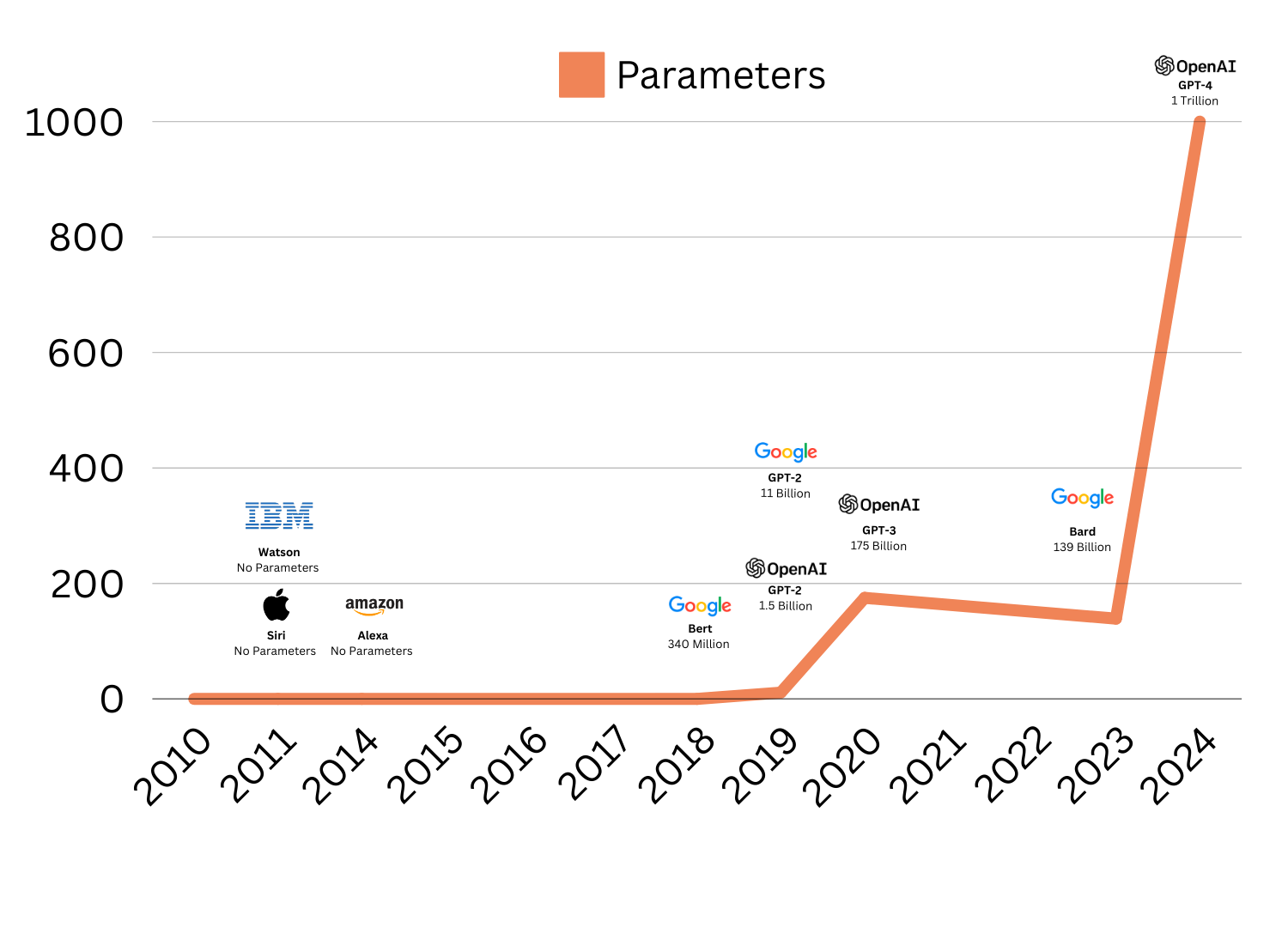

AI models are rapidly scaling in parameter count

Parameters

In simple terms, parameters in Large Language Models (LLMs) are the numerical values (weights) that a model adjusts during training; they act like "settings" the model learns, and together they let it understand and generate human-like text. In general, models with more parameters can capture more complex patterns, although size alone does not guarantee accuracy: the quality of the training data and methods also matters. Early AI models had relatively few parameters, but models have since grown dramatically: GPT-3 has 175 billion parameters, while OpenAI has not officially disclosed GPT-4's parameter count. This growth has helped models better understand context, generate more fluent responses, and perform tasks like translation or summarization with greater accuracy.
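To make "parameters" concrete, the sketch below counts them. In a fully connected layer, each connection has one weight and each output unit one bias. For GPT-style Transformers, a common rough estimate is about 12 * d_model^2 weights per layer (attention projections plus a feed-forward block four times wider than the model dimension), plus the token and position embedding tables; this approximation ignores biases and layer norms. The GPT-3 configuration values used (96 layers, model dimension 12,288, 50,257-token vocabulary, 2,048-token context) are the published ones; the function names are hypothetical.

```python
def dense_params(layer_sizes):
    """Weights plus biases for a fully connected network, e.g. [784, 128, 10]."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

def transformer_params_approx(n_layers, d_model, vocab_size, context_len):
    """Rough GPT-style estimate: ~12 * d_model**2 per layer (4*d^2 attention,
    8*d^2 feed-forward) plus token and position embeddings.
    Ignores biases and layer-norm parameters."""
    per_layer = 12 * d_model ** 2
    embeddings = (vocab_size + context_len) * d_model
    return n_layers * per_layer + embeddings

small_net = dense_params([784, 128, 10])
gpt3_estimate = transformer_params_approx(n_layers=96, d_model=12288,
                                          vocab_size=50257, context_len=2048)
print(f"{small_net:,}")                  # 101,770
print(f"{gpt3_estimate / 1e9:.1f} billion")  # 174.6 billion
```

The estimate comes to about 174.6 billion, close to GPT-3's reported 175 billion parameters, which shows that almost all of them sit in the repeated Transformer layers.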

AI Market: TAM, CAGR, and Growth Catalysts

Total Addressable Market (TAM): The global Artificial Intelligence (AI) market was valued at approximately $200 billion in 2023 and is projected to reach $1.87 trillion by 2030, a compound annual growth rate (CAGR) of 37.3% from 2023 to 2030 (Grand View Research), driven by widespread adoption across industries such as healthcare, finance, automotive, and retail. Generative AI, a sub-segment, is expected to grow from $13.71 billion in 2023 to over $109 billion by 2030, a CAGR of 35.6% over the same period (IDC Blog), reflecting its fast-evolving applications in language models, computer vision, and multimodal systems.
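The CAGR figures above follow the standard compounding formula, CAGR = (end value / start value)^(1 / years) - 1. The sketch below applies it to the AI market figures cited in this section (in billions of US dollars); the function names are hypothetical.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1

def project(start_value, rate, years):
    """Value after compounding start_value at `rate` per year for `years` years."""
    return start_value * (1 + rate) ** years

implied = cagr(200, 1870, 7)        # AI market, $ billions, 2023 -> 2030
projected = project(200, 0.373, 7)  # compounding the cited 37.3% CAGR
print(f"implied CAGR: {implied:.1%}, projected 2030 value: ${projected:,.0f}B")
```

Compounding $200 billion at 37.3% for seven years gives roughly $1.84 trillion, and the rate implied by the two endpoints is about 37.6%, so the cited figures are broadly consistent once rounding is taken into account.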

Key Growth Catalysts:

Industry-Specific Applications

Healthcare: AI for Diagnostics and Robotic Surgery

AI has made significant inroads into healthcare, improving diagnostics, patient care, and operational efficiency. Machine learning models now assist in the early detection of diseases such as cancer, heart conditions, and neurological disorders by analyzing medical images, in some studies with accuracy that rivals or exceeds that of human experts. For example, tools such as IBM Watson Health have been applied to interpreting radiology images. AI algorithms are also advancing drug discovery by streamlining the identification of promising compounds and predicting their effectiveness.

In robotic surgery, systems such as Intuitive Surgical's da Vinci surgical system, which surgeons control from a console, enable minimally invasive procedures with greater precision and shorter recovery times. Machine learning is increasingly being added to robotic surgery platforms, for example to analyze procedure data and provide real-time insights that support decision-making during operations. These innovations could significantly reduce healthcare costs and improve patient outcomes.

Finance: AI for Risk Management, Compliance, and Investment Analytics

AI is transforming finance by enabling more accurate predictions, improving operational efficiency, and strengthening risk management. Machine learning algorithms are increasingly used to detect fraudulent transactions, optimize credit scoring, and manage market risk by analyzing vast amounts of data for patterns that would be too complex for traditional models.

For compliance, AI tools help financial institutions adhere to regulations by automating processes such as transaction monitoring and reporting suspicious activities. These systems can quickly identify discrepancies or unusual patterns that may indicate fraud or financial crimes, thereby reducing human error and improving security.
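One simple way to flag "unusual patterns" is a z-score check: measure how many standard deviations a new transaction lies from an account's historical mean. The sketch below is a deliberately basic rule with hypothetical data and an assumed threshold of 3; production monitoring systems typically combine many features and learned models.

```python
import statistics

def flag_unusual(history, new_amounts, threshold=3.0):
    """Return new amounts whose z-score against past amounts exceeds `threshold`."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # sample standard deviation
    if stdev == 0:
        # no variation in history: anything different from the usual amount is unusual
        return [amt for amt in new_amounts if amt != mean]
    return [amt for amt in new_amounts if abs(amt - mean) / stdev > threshold]

# hypothetical past transaction amounts for one account
history = [20, 25, 22, 19, 24, 21, 23, 18, 26, 22]
print(flag_unusual(history, [23, 500, 21]))  # [500]
```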

In investment analytics, AI is playing a crucial role in algorithmic trading and portfolio management. Machine learning models analyze market data, news sentiment, and financial reports to provide real-time trading recommendations and predictive insights. This has the potential to not only enhance profitability but also enable greater financial inclusivity by democratizing access to sophisticated investment tools.

Generative AI Expansion

Increased Demand for Large Language Models (LLMs)

Generative AI, particularly Large Language Models (LLMs) like GPT-4, has seen an explosion in demand across a wide range of industries. These models, which can generate human-like text, are now integral to customer service, content creation, and translation services. In content creation, LLMs are being used to draft articles, produce marketing copy, and generate product descriptions at scale. Their ability to create coherent, contextually aware, and creative text has led to their rapid adoption by companies looking to automate content generation, thus reducing costs and time-to-market.

In customer service, AI chatbots powered by LLMs are improving user experiences by providing 24/7 support that can handle complex inquiries, reducing wait times and freeing up human agents to deal with more nuanced issues. The ability of LLMs to understand and respond in natural language also allows for more personalized interactions with customers, leading to higher satisfaction and engagement.

In translation, LLMs such as OpenAI's GPT models, along with Transformer-based services such as Google Translate, have changed the way businesses approach global communication, enabling faster and more nuanced translations at scale, although human review remains important for high-stakes content. This is particularly valuable as businesses expand into international markets and require scalable, effective language solutions.

Cloud Computing and AI-as-a-Service (AIaaS)

The integration of AI capabilities into cloud computing platforms has been a key factor in driving the AI market forward. Cloud service providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud have heavily invested in AI, offering machine learning services to businesses of all sizes. By utilizing cloud-based AI solutions, companies can access cutting-edge tools without the need for extensive in-house infrastructure or expertise, thereby lowering costs and accelerating AI adoption.

AI-as-a-Service (AIaaS) is democratizing access to advanced AI, providing smaller firms with tools that were once available only to large corporations. AIaaS allows businesses to integrate machine learning models for a variety of applications, such as predictive analytics, natural language processing, and image recognition, without needing deep technical know-how or the resources to build their own models. This cloud-based flexibility is expected to drive significant growth in sectors like retail, healthcare, and finance, where AI can offer a competitive edge.

Government Initiatives and Regulations

Government support for AI research and development is accelerating AI deployment across sectors. The United States, China, and the European Union have all recognized the transformative potential of AI and are backing initiatives to lead in its development. In the U.S., the National Artificial Intelligence Initiative Act of 2020 aims to coordinate federal AI research efforts and maintain U.S. leadership in the field. The European Union, meanwhile, is focusing on AI ethics and responsible innovation through frameworks such as the Artificial Intelligence Act, which seeks to ensure that AI systems are developed and used in ways that are ethical, transparent, and consistent with human rights.

These regulatory frameworks aim to create a balanced environment in which AI can be harnessed for growth while risks such as data privacy violations, bias, and misuse are mitigated. Governments also fund AI-driven innovation in healthcare, manufacturing, and cybersecurity, providing both incentives and resources for continued AI adoption.

Consumer Trends

The rise of AI has profoundly impacted consumer experiences across various industries, particularly in retail, advertising, and entertainment. AI technologies enable hyper-personalization, tailoring products and services to individual preferences and behaviors, thus improving customer satisfaction and driving sales.

In retail, AI is used to analyze consumer purchasing behavior, predict trends, and personalize shopping experiences through recommendation engines. Retail giants like Amazon use AI to suggest products based on customer history and preferences, creating a more customized shopping experience that increases conversion rates and customer loyalty.
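Recommendation engines of the kind described above are often built with collaborative filtering. The sketch below is a minimal item-based version: it represents each product by the ratings users gave it, measures cosine similarity between products, and ranks products a customer has not yet rated by their similarity to the ones they have. The users, products, ratings, and function names are hypothetical, and this is not a description of Amazon's actual system.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length rating vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def recommend(ratings, user, k=1):
    """Item-based collaborative filtering; ratings maps user -> {item: rating}."""
    users = sorted(ratings)
    items = sorted({item for r in ratings.values() for item in r})
    # each item becomes a vector of ratings across all users (0 = not rated)
    vectors = {i: [ratings[u].get(i, 0) for u in users] for i in items}
    seen = ratings[user]
    scores = {}
    for cand in items:
        if cand in seen:
            continue
        # weight similarity to each rated item by how much the user liked it
        scores[cand] = sum(cosine(vectors[cand], vectors[i]) * r for i, r in seen.items())
    return sorted(scores, key=scores.get, reverse=True)[:k]

ratings = {
    "ana": {"book": 5, "lamp": 3},
    "ben": {"book": 4, "lamp": 2, "pen": 5},
    "cy":  {"mug": 4, "desk": 5},
    "dee": {"book": 5, "pen": 4},
}
print(recommend(ratings, "ana"))  # ['pen']
```

Users who rated the same items as "ana" also liked "pen", so it ranks first, while "mug" and "desk" share no raters with her items and score zero.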

In advertising, AI has transformed how businesses engage with potential customers. Machine learning models are used to optimize ad targeting by predicting consumer preferences from browsing history, demographics, and even social media activity. This precise targeting can improve advertising ROI and produce more relevant, less intrusive ads for consumers.

In entertainment, AI is changing how content is delivered and consumed. Streaming services like Netflix and Spotify use AI-driven recommendation systems to suggest content that aligns with viewers' tastes, enhancing user engagement and retention. Additionally, AI is increasingly used in content creation itself, from generating scripts to producing music, further expanding its influence on the entertainment industry.