Semiconductors

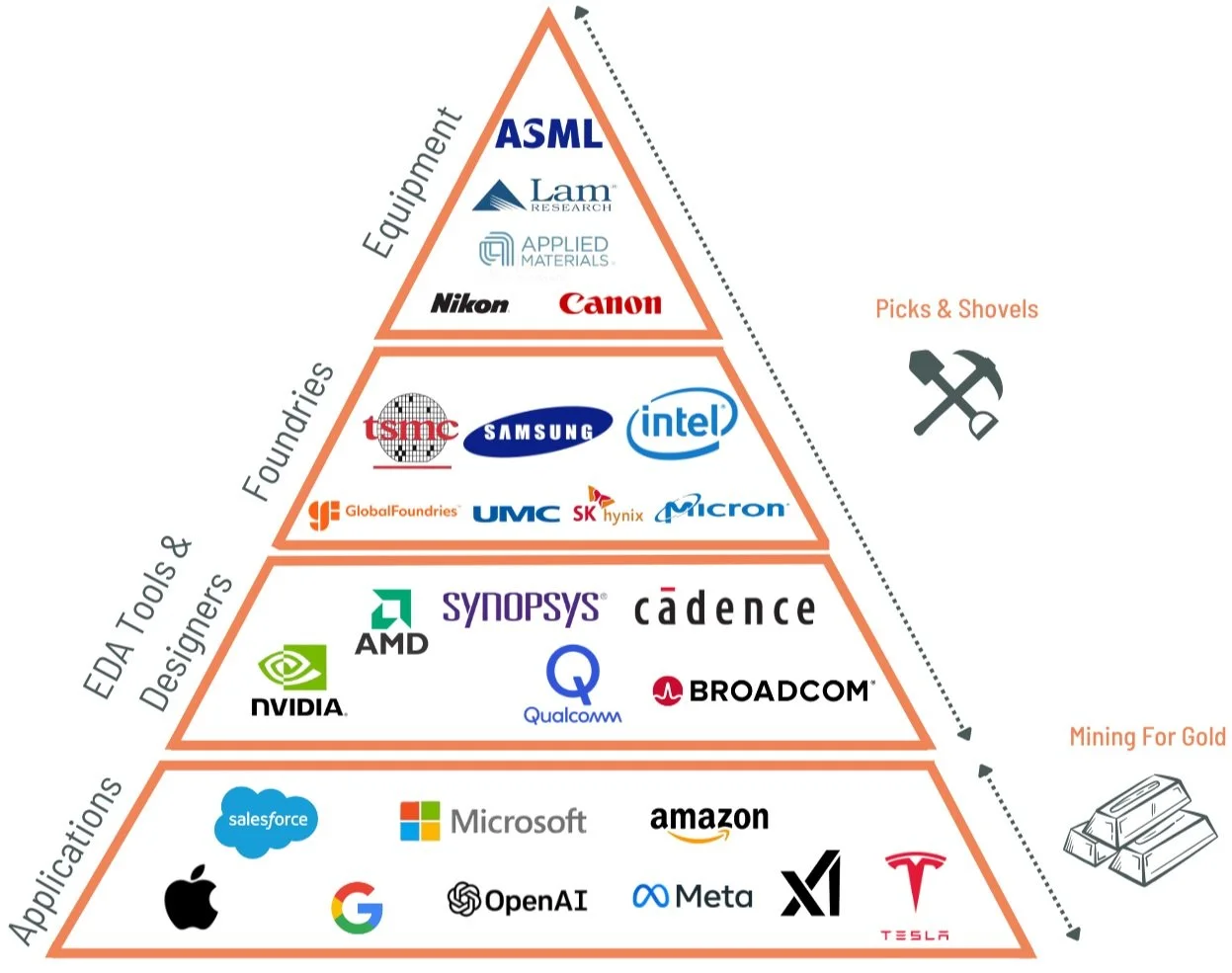

Semiconductor chips are tiny devices that power almost everything electronic, from phones to cars. They are the "picks and shovels" of modern industry, driving major technology shifts such as Industry 3.0 (computers and automation) and Industry 4.0 (AI, robotics, and smart devices).

History

1947: The Birth of the Transistor

The invention of the transistor, considered one of the most transformative technologies of the 20th century, was accomplished at Bell Laboratories by John Bardeen, Walter Houser Brattain, and William Shockley. This innovation became the foundation of modern electronics. Many would argue that this was the birth of Industry 3.0, defined by the rise of technologies such as semiconductors, programmable logic controllers (PLCs), computer-aided design (CAD), and early automation systems that transformed manufacturing through digitalization and precision.

1952-1956: Proliferation of Semiconductor Technology

In 1952, Bell Labs began licensing its transistor manufacturing technology, laying the groundwork for companies like Texas Instruments, IBM, Motorola, and Sony to enter the field.

In 1956, during its third round of transistor-technology sharing, Bell Labs formally introduced lithography, diffusion, and oxide-layer masking techniques. This critical advancement fueled the growth of Fairchild Semiconductor, Intel, AMD, and other future industry leaders, and spurred innovation in lithography.

1958: The First Integrated Circuit

The concept of integrating multiple transistors onto a single circuit was first proposed in 1952 by Geoffrey Dummer.

On August 28, 1958, Jack Kilby of Texas Instruments created the first functional integrated circuit (IC) by wire bonding three transistors together, marking the birth of what is now called a System-in-Package (SiP).

In 1959, Robert Noyce of Fairchild Semiconductor combined Jean Hoerni's planar process with the IC concept, creating the modern monolithic IC.

1956-1968: The Rise of Silicon Valley

The story of Intel's founding is also the story of how Silicon Valley got its name. In 1956, William Shockley, co-inventor of the transistor, started a company called Shockley Semiconductor Laboratory. The name "Silicon Valley" comes from the silicon used in transistors and chips. A year later, the so-called "traitorous eight" quit to start Fairchild Semiconductor, which quickly became the world's premier chip company. A decade later, two of Fairchild's founders, Bob Noyce and Gordon Moore, left to start Intel. Intel went on to introduce the first commercially available DRAM memory chip (the 1103, in 1970) and the first commercial single-chip microprocessor, a CPU on one chip (the 4004, in 1971). Moore first described what became known as Moore's Law in 1965 and revised it in 1975: "the number of transistors incorporated in a chip will approximately double every 24 months." The trend has held approximately true for decades.

1960s-1970s: The Foundations of Lithography

In 1961, GCA Medical Technology Inc. built the first lithography machine, and in the 1970s Kasper Instruments Inc. built its first lithography machine. Because the barrier to entry in lithography was relatively low at the time, Nikon and Canon of Japan, whose optics businesses were closely related, also entered the lithography industry in the late 1960s.

1980s: The Glory Years for Japan

By the early 1980s, Nikon had released its first commercial stepper and was serving a series of large customers such as IBM, Intel, and AMD. By 1984, Nikon had drawn level with GCA, while Ultratech, Eaton, P&E, Canon, Hitachi, and a few others shared the rest of the market. That same year, ASML (Advanced Semiconductor Materials Lithography), the future dominant player in the lithography industry, was founded as a joint venture between ASM International and Philips. The 1980s were the glory years for the Japanese semiconductor and photolithography industries: in the late 1980s, Nikon and Canon maintained a duopoly, while ASML held 10% of the market.

1990s: The Birth of EUV Research

In the 1990s, researchers at several United States Department of Energy (DOE) national laboratories (specifically Lawrence Livermore, Lawrence Berkeley, and Sandia) were funded to perform basic research into the technical obstacles of EUV. The Extreme Ultraviolet Limited Liability Company (EUV LLC) was then formed and succeeded in developing the technology. Intel, Canon, and Nikon (leaders in the field at the time), as well as ASML and Silicon Valley Group (SVG), all sought licenses. However, Congress denied the Japanese companies the necessary permission: they were seen as strong technical competitors that should not benefit from taxpayer-funded research at the expense of American companies. In 2001, ASML acquired SVG, leaving ASML as the sole beneficiary of this critical technology.

2018: ASML’s EUV Breakthrough

By 2018, after roughly two decades of development, ASML had succeeded in commercializing the EUV LLC intellectual property. It built on the European-funded EUCLIDES (Extreme UV Concept Lithography Development System) program and on its long-standing partners, the German optics manufacturer ZEISS and the synchrotron light-source supplier Oxford Instruments. Nikon and Canon, having been denied access to the IP, were left far behind.

Before EUV, chip makers could choose between three companies for their photolithography tools: Canon, Nikon, and ASML. Nikon is still a competitor in DUV, but ASML is the only option for EUV. Experts say it could take decades for any other company to catch up. This is not only because of ASML's proprietary technology, but also because it has built complex, often exclusive, deals with nearly 800 suppliers.

The Technology

What is a Chip?

A microchip, also known as a chip, computer chip, or integrated circuit, is a small, flat piece of silicon that contains thousands or even billions of tiny electrical circuits. These circuits allow the chip to perform tasks like processing data and controlling devices.

The process of making a microchip begins with silicon wafers. Silicon is the material of choice because it is a semiconductor, meaning its ability to conduct electricity can be controlled. Engineers add small amounts of other elements, such as phosphorus or boron, in a process called doping. This lets them control how electric current flows through the silicon, so that it can be switched on or off. This is what enables chips to perform calculations and execute tasks.

To create the chip, purified silicon is first melted and grown into a single large crystal called an ingot. The ingot is then sliced into thin, round discs called wafers, which serve as the base for building the chips. Once the wafer is ready, transistors, the key components in a chip, are built up in layers. Each transistor is like a tiny switch that can turn electrical signals on and off. The more transistors a chip has, the more powerful it can be.

Transistors and circuits are added to the chip through lithography, in which light is used to "print" the chip's tiny patterns onto the wafer. The wafer is first coated with a special light-sensitive chemical called photoresist, and light is then projected through a mask containing the chip design. Where the light hits the photoresist, it changes the resist's chemistry. This creates intricate patterns that later etching and deposition steps turn into the circuits and transistors on the chip.

This process is repeated, adding many layers to build up the full chip, with each layer adding new circuits that allow the chip to perform its functions. The end result is a microchip that can power everything from smartphones and computers to cars and appliances.
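The idea that chips compute by switching can be made concrete with a toy model. The Python sketch below treats each transistor as an ideal on/off switch and builds a NAND gate from two switches in series. It then combines NAND gates into a half adder that adds two bits. This is a logical illustration under simplifying assumptions. Real chips use complementary (CMOS) transistor pairs and have to manage voltages and timing. The function names are hypothetical.

```python
from itertools import product

def switch(gate_on: bool, signal: bool) -> bool:
    """Idealised transistor: passes `signal` through only when its gate is on."""
    return signal and gate_on

def nand(a: bool, b: bool) -> bool:
    # Two switches in series conduct only when both are on; the gate outputs the inverse.
    return not switch(b, switch(a, True))

def and_gate(a: bool, b: bool) -> bool:
    n = nand(a, b)
    return nand(n, n)  # a NAND fed the same signal twice acts as an inverter

def xor_gate(a: bool, b: bool) -> bool:
    # Standard four-NAND construction of XOR.
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))

def half_adder(a: bool, b: bool) -> tuple[bool, bool]:
    """Add two one-bit numbers; returns (sum_bit, carry_bit)."""
    return xor_gate(a, b), and_gate(a, b)

for a, b in product([False, True], repeat=2):
    s, c = half_adder(a, b)
    print(f"{int(a)} + {int(b)} = carry {int(c)}, sum {int(s)}")
```

Chaining half adders into full adders and wider circuits is, in principle, how billions of switches add up to arithmetic.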

How Microchips Relate to CPUs and GPUs

CPU (Central Processing Unit):

The CPU is like the "brain" of a computer. It handles all the basic tasks, like running software, doing math, and managing the flow of information. CPUs are found in almost every device, including computers, phones, and servers. They’re great for doing one thing at a time quickly, like following a to-do list step by step.

GPU (Graphics Processing Unit):

GPUs are specialised microchips designed to handle large-scale parallel processing - doing many tasks at the same time. Originally built for rendering graphics and images in gaming and visual applications, they are now widely used in fields like artificial intelligence (AI), machine learning, and scientific simulations.

GPUs can process thousands of operations simultaneously, making them highly efficient for tasks requiring massive data computation.
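The difference between the two styles of work can be sketched as an analogy in Python. The sequential version handles pixels one after another, CPU-style. The data-parallel version splits the same independent work into chunks that a pool of workers processes at once. Independence between elements is the property GPUs exploit, across thousands of cores. This is an illustration only: Python threads run on the CPU and, because of the global interpreter lock, will not actually speed up this arithmetic. `brighten` and the chunk size are hypothetical choices.

```python
from concurrent.futures import ThreadPoolExecutor

def brighten(pixel: int) -> int:
    """Hypothetical per-pixel task: raise a 0-255 brightness value by 40, capped at 255."""
    return min(pixel + 40, 255)

def sequential(pixels: list[int]) -> list[int]:
    # CPU-style: one element after another, in order.
    return [brighten(p) for p in pixels]

def data_parallel(pixels: list[int], workers: int = 4, chunk: int = 256) -> list[int]:
    # GPU-style idea: every pixel is independent, so chunks can be handed out at once.
    chunks = [pixels[i:i + chunk] for i in range(0, len(pixels), chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map yields results in input order, so the image layout is preserved.
        processed = pool.map(sequential, chunks)
        return [p for part in processed for p in part]

image = [i % 256 for i in range(1_000)]
print(data_parallel(image) == sequential(image))  # same result, different work pattern
```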

The Evolution of Lithography: DUV to EUV

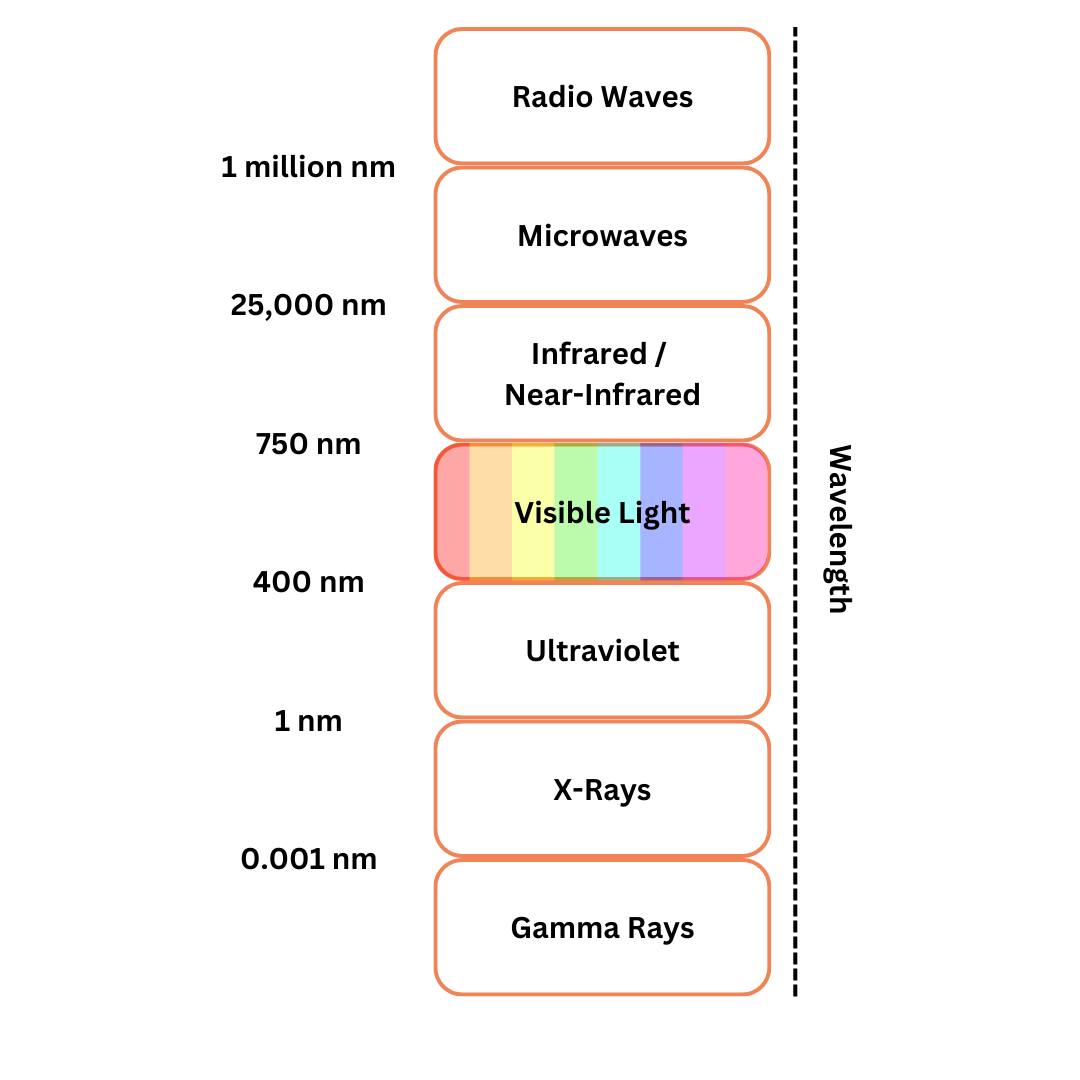

In the past, lithography used light with much longer wavelengths. As chips needed to get smaller, the wavelength of the light source had to shrink as well. Here's how it progressed:

Visible Light (1960s-1980s)

In the early days of lithography, visible light was used. However, the wavelength of visible light was too long to produce the tiny features needed for modern microchips. This limited how small, and therefore how powerful, chips could be.

DUV (Deep Ultraviolet) Lithography (1990s-2000s)

In the 1990s, the industry moved to DUV (Deep Ultraviolet) light sources with much shorter wavelengths: 248nm, and later 193nm. These shorter wavelengths allowed smaller transistors to be printed, which led to smaller, more powerful chips. Combined with immersion and multiple-patterning techniques, DUV was eventually stretched as far as 7nm-class nodes, a significant leap forward.

EUV (Extreme Ultraviolet) Lithography (2010s-present)

The most recent advancement is EUV (Extreme Ultraviolet) lithography, which uses 13.5nm light. This is far shorter than the 193nm wavelength used in DUV machines, allowing even smaller transistors to be produced at 3nm and upcoming 2nm-class nodes. EUV has become essential for creating the latest-generation chips, offering increased speed, power efficiency, and performance.

In summary, lithography light sources have evolved significantly. Visible light was used in the 1960s. In the 1990s the industry shifted to DUV light at 248nm and later 193nm. In the 2000s, 193nm immersion lithography placed a layer of water between the lens and the wafer, which raises the numerical aperture and sharpens resolution. Today, ASML's EUV machines, using 13.5nm light, represent the cutting edge of lithography and enable the smallest, most powerful chips ever made. These advancements have allowed ASML to dominate the high-end lithography market, leaving once-dominant Japanese competitors such as Nikon and Canon far behind.

This is where ASML, the world leader in lithography, plays a crucial role. Its EUV machines use light with a wavelength of just 13.5nm. EUV light is invisible to the human eye and is absorbed by almost all materials, including air. The process must therefore take place in a vacuum, and the light is focused with mirrors rather than lenses. These machines are extraordinary feats of engineering, capable of patterning 3,000 wafers per day, with hundreds of chips per wafer. Each chip can contain billions of transistors, each thousands of times thinner than a human hair.
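The Rayleigh resolution criterion shows why shorter wavelengths matter. It is the standard first-order estimate of the smallest printable feature (critical dimension, CD) in optical lithography: CD = k1 × λ / NA. Here λ is the wavelength, NA is the numerical aperture of the optics, and k1 is a process-dependent factor. The sketch below assumes k1 = 0.3 for every tool, which is an illustrative value. Real k1 varies by process, and multiple patterning can print finer pitches than a single exposure. The NA values are the nominal ones for ArF immersion (1.35), standard EUV (0.33), and High-NA EUV (0.55).

```python
def rayleigh_cd(wavelength_nm: float, numerical_aperture: float, k1: float = 0.3) -> float:
    """Smallest printable half-pitch in nm from the Rayleigh criterion CD = k1 * lambda / NA."""
    return k1 * wavelength_nm / numerical_aperture

# (label, wavelength in nm, numerical aperture); k1 = 0.3 is an assumed process factor.
tools = [
    ("DUV ArF immersion", 193.0, 1.35),
    ("EUV (NXE)", 13.5, 0.33),
    ("High-NA EUV (EXE)", 13.5, 0.55),
]

for label, wavelength, na in tools:
    print(f"{label:20s} CD ~ {rayleigh_cd(wavelength, na):5.1f} nm")
```

Under these assumptions, a single exposure resolves roughly 43nm with DUV immersion, about 12nm with EUV, and about 7nm with High-NA EUV. The smaller wavelength does most of the work, and a larger NA adds the rest.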

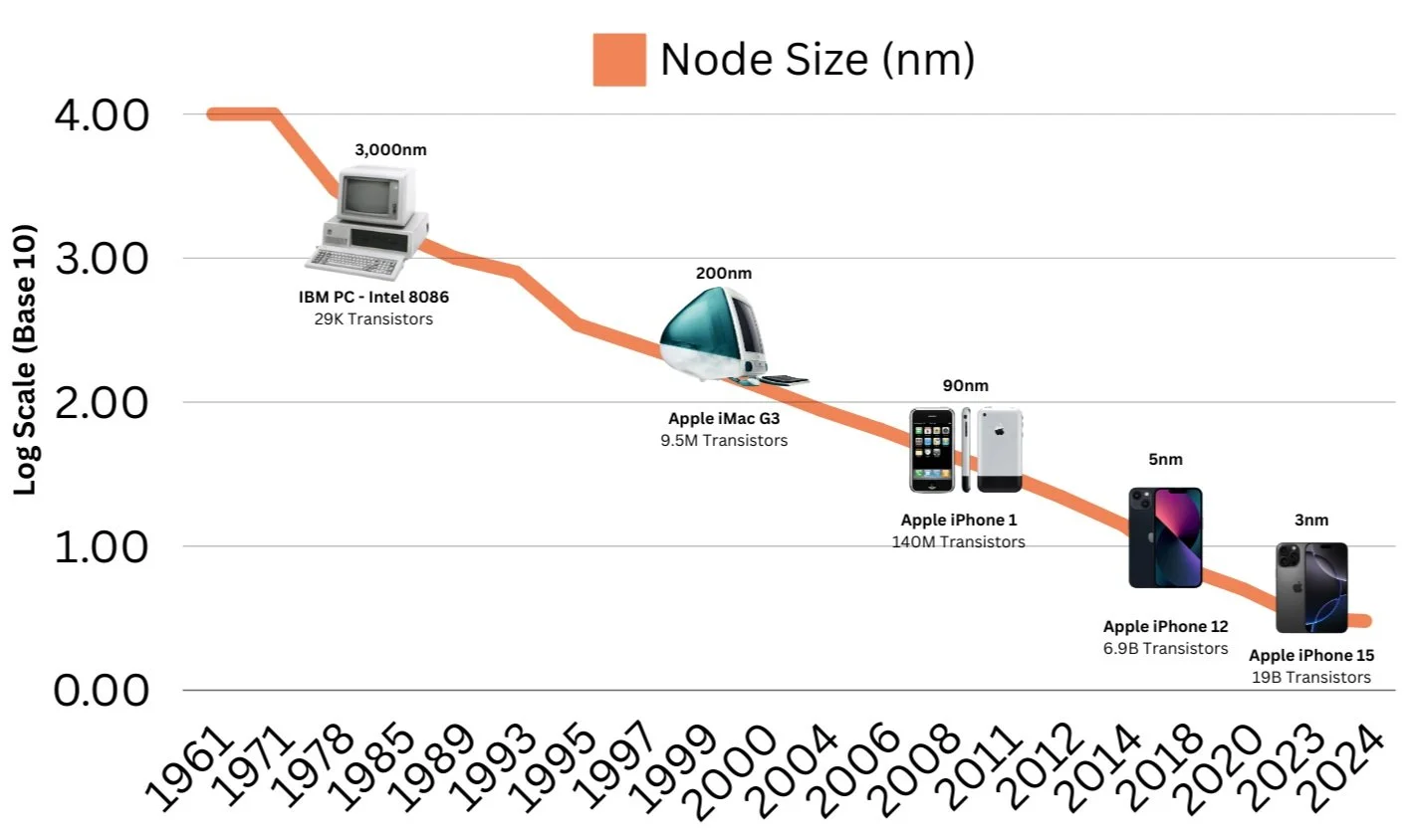

Node Size (nm)

A smaller technology node means making the transistors on a chip smaller. Transistors are tiny switches that control the flow of electricity. Shrinking them makes them faster, because signals have less distance to travel. It also makes them more energy-efficient, because each switch needs less energy to turn on and off.

The size of a node is measured in nanometers (nm), an incredibly tiny unit of length equal to one-billionth of a meter (0.000000001m). Put simply, the smaller the number, the more advanced and powerful the chip generally is. Modern node names such as "3nm" are marketing labels for a process generation rather than the literal size of any transistor feature. For comparison, the original IBM PC of 1981 used an Intel 8088 chip built on a 3,000nm process with about 29,000 transistors. Fast forward to the iPhone 15 Pro, whose A17 Pro chip is built on a 3nm process (1,000 times smaller) and contains over 19 billion transistors.
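The transistor counts above are enough to check Moore's Law. Going from about 29,000 transistors to about 19 billion takes log2(19e9 / 29,000) doublings. Dividing the elapsed time by that number gives the average doubling period. The sketch assumes the product years for the two endpoints: 1981 for the IBM PC and 2023 for the iPhone.

```python
import math

def doubling_period_months(start_count: float, end_count: float, years: float) -> float:
    """Average months per doubling implied by growth from start_count to end_count."""
    doublings = math.log2(end_count / start_count)
    return years * 12 / doublings

# Figures from the text: ~29,000 transistors (1981 IBM PC) vs ~19 billion (2023 iPhone chip).
doublings = math.log2(19e9 / 29_000)
period = doubling_period_months(29_000, 19e9, 2023 - 1981)
print(f"{doublings:.1f} doublings, one every {period:.0f} months")
```

This prints `19.3 doublings, one every 26 months`, which is close to the 24-month figure in Moore's Law. Transistor counts for specific chips are approximate, so treat this as a rough check rather than a precise measurement.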

Leading Node vs Trailing Node

Leading node process technology refers to the most advanced semiconductor manufacturing techniques, which use extremely small transistors (at nodes such as 5nm and 3nm) to produce faster, more power-efficient chips. These cutting-edge nodes rely on EUV (Extreme Ultraviolet) lithography, which allows more precise patterning of extremely small features. This precision is crucial for high-performance devices that require large numbers of densely packed transistors. Apple's A17 Pro chip (used in the iPhone 15 Pro) and NVIDIA's H100 GPUs (used for AI and machine learning) are examples of products made on leading nodes. These chips rely on the performance and efficiency of smaller nodes, which is why they are ideal for smartphones, AI processors, and high-end computers.

On the other hand, trailing node process technologies (such as 22nm or older) often use DUV (Deep Ultraviolet) lithography. While DUV is not as precise as EUV, it is more cost-effective and sufficient for creating chips where extreme performance and transistor density are not critical. These trailing nodes are used in applications like Internet of Things (IoT) devices, automotive electronics, and other consumer products where lower cost is a priority. For example, microcontrollers used in smart home devices and automotive chips for basic vehicle control systems typically rely on trailing node technologies. These chips don’t require the same level of precision and transistor density as the advanced chips in smartphones or AI hardware.

The Supply Chain

The semiconductor ecosystem is a complex, end-to-end technology value chain that requires tight integration between companies. Each company has a specialized set of capabilities and focuses on only one part of the chain. To understand the role of each player, let's work backwards from an end product, for example a smartphone sold by Apple. A smartphone contains many chips. These chips are designed by fabless companies, such as Nvidia, AMD, and Qualcomm, which design and sell chips but do not manufacture them. Once designed, the chips are manufactured by a foundry, a semiconductor company that operates fabrication plants (“fabs”), such as TSMC, Intel, or Samsung. The fabs in turn use high-tech machinery, such as ASML's EUV lithography machines, to manufacture the chips.

Foundries – “fabs”

Companies that own and operate fabrication plants (“fabs”) and physically manufacture semiconductor chips.

“Fabless”

Design and market semiconductor chips but do not own or operate their own fabrication facilities.

Equipment

ASML is the undisputed leader in the semiconductor tool market, particularly in Extreme Ultraviolet (EUV) lithography. Here it holds a complete monopoly: its machines account for 100% of the global market for this advanced technology. EUV is essential for producing the smallest, most advanced chips at nodes such as 5nm, 3nm, and below. Competitors Nikon and Canon, by contrast, remain focused on Deep Ultraviolet (DUV) lithography, which is still used for less advanced nodes. They have been unable to develop EUV technology, leaving ASML dominant in the high-end market. ASML also leads the DUV market with about 70% share, compared with around 20% for Nikon and about 10% for Canon, further solidifying its position.

ASML relies on a vast network of more than 5,000 suppliers to manufacture its EUV machines. Each machine is made up of seven modules, and each module is built at one of ASML's six manufacturing sites (among its 60 locations worldwide). The modules are then shipped to Veldhoven, the Netherlands, where the machine is assembled and tested. It is then disassembled again and shipped to the customer, a delivery that takes 20 trucks and three fully loaded Boeing 747s.

ASML’s EUV machines fall into two main categories:

TWINSCAN NXE Series: These are the current production workhorses, including models like the NXE:3400C and NXE:3600D, which are used for nodes such as 5nm and 3nm.

TWINSCAN EXE Series (High-NA EUV): These next-generation machines, including the EXE:5000 and EXE:5200, raise the numerical aperture (NA) from 0.33 to 0.55 to print even finer features. They are designed to enable chip production below 2nm.

The technology behind these machines is incredibly expensive, with TWINSCAN NXE models costing over $200 million each. The High-NA EUV machines (EXE series) are even more costly, with prices estimated at over US $400 million. Due to the high price tag and the complexity of operating these machines, only a few companies can afford them. The largest players in the semiconductor industry, including TSMC, Samsung, Intel, SK Hynix, and Micron, are the primary customers. Together, TSMC, Samsung, and Intel account for over 80% of ASML’s business, underscoring the importance of these companies in driving the demand for advanced chip manufacturing.

Other tool providers like Lam Research and Applied Materials play key roles in semiconductor fabrication, focusing on processes like etching, deposition, and chemical treatments. However, these companies do not compete directly in the EUV space, which remains ASML's domain. Overall, ASML’s control of EUV and its role in advancing chip technology ensure its continued dominance in the semiconductor tooling market.

ASML's rise to dominance in EUV was the result of decades of investment in research and the development of cutting-edge technology. The financial resources required to develop and operate these tools are a significant barrier to entry. Even companies with substantial financial backing, like Nikon and Canon, faced immense challenges and abandoned EUV development years ago, leaving ASML to dominate. Alternative methods for reaching the 7nm node without EUV have been announced, such as DUV multiple patterning. However, they require more process steps and are less efficient than EUV-based solutions, which reinforces the necessity of ASML's technology in the production of advanced chips.

EUV Lithography and the Department of Energy - ASML

The development of EUV lithography was shaped by U.S. government funding through the Department of Energy (DOE), which supported key research in the 1990s and 2000s. ASML gained a critical edge by acquiring U.S.-based companies like Chromatica and Advanced Lithography, securing vital patents and technology for EUV. Meanwhile, Nikon and Canon, despite leading in DUV lithography at the time, couldn't access these U.S.-developed technologies due to patent restrictions enforced by the U.S. government, allowing ASML to become the only company with the ability to produce and commercialize EUV lithography machines.

Foundries

TSMC’s dominance in the semiconductor foundry market stems from its technological leadership, focus on advanced manufacturing nodes, and specialization in the foundry business. With over 60% of the global market share and more than 80% of the 5nm and 3nm node market, TSMC has attracted major clients like Apple, AMD, and Nvidia by consistently delivering cutting-edge chip technologies. Unlike Samsung, which is spread across various businesses like memory and displays, or Intel, which has only recently expanded its foundry operations, TSMC’s singular focus on chip manufacturing has allowed it to invest heavily in R&D and production capacity. Its strong customer relationships, ecosystem advantages, and reliable manufacturing capabilities have helped it maintain a dominant position, while competitors struggle to match its pace in advanced nodes.

Samsung, the second-largest foundry with around 11% market share, remains a strong competitor. However, it splits its focus between contract manufacturing and its large memory business, which limits its foundry capacity. Intel, with 6% of the market, is working to expand its foundry business but lags behind TSMC technologically. Other key players, such as GlobalFoundries and UMC, also compete but do not offer the advanced nodes that TSMC leads in, reinforcing its market dominance.

Design / EDA Tools

Chip designers and Electronic Design Automation (EDA) tool providers serve distinct but interconnected roles in the semiconductor industry. Chip designers like NVIDIA, AMD and Qualcomm focus on creating blueprints and architectures for specialised semiconductor devices such as GPUs, CPUs, and AI processors. They rely on the software and automation provided by EDA tool providers—companies like Synopsys, Cadence, and Siemens (Mentor Graphics)—to design, simulate, verify, and optimize these chips. EDA tools streamline complex tasks such as schematic capture, physical layout, timing analysis, and power optimization, enabling designers to meet power, performance, and area (PPA) requirements. Once designs are finalized, chip designers send them to foundries like TSMC for fabrication. EDA providers thus play an enabling role, offering essential technology that powers the innovative designs developed by chip companies.

Outlook

The semiconductor manufacturing equipment market, which includes tools essential for chip production, is valued at approximately $110.5 billion in 2024, with projections to reach $175.2 billion by 2030, growing at a compound annual growth rate (CAGR) of 7.9% (Grand View Research, Fortune Business Insights). Similarly, the semiconductor foundry market is expected to continue expanding, with a forecasted size of $110 billion by 2025, driven by the demand for advanced node manufacturing in sectors like telecommunications, consumer electronics, and automotive, with a CAGR of 8.5% from 2024 to 2030 (IC Insights, Fortune Business Insights). The electronic design automation (EDA) tools market, which supports chip design, is valued at $13 billion in 2024 and is expected to grow at a strong 9.6% CAGR through 2030, driven by the increasing complexity of designs needed for cutting-edge technologies such as AI and advanced computing (Markets and Markets, Grand View Research).
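The growth rates quoted above follow the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The Python sketch below uses only the figures in this paragraph. It checks the equipment forecast against its stated CAGR and projects the EDA market forward at its stated rate.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1

def project(start_value: float, rate: float, years: float) -> float:
    """Value after compounding start_value at `rate` per year for `years` years."""
    return start_value * (1 + rate) ** years

# Equipment market: $110.5B (2024) -> $175.2B (2030), figures quoted above.
print(f"Equipment CAGR: {cagr(110.5, 175.2, 2030 - 2024):.1%}")
# EDA market: $13B (2024) growing 9.6% a year through 2030.
print(f"EDA market in 2030: ${project(13, 0.096, 2030 - 2024):.1f}B")
```

The equipment figures imply about 8.0% a year, close to the quoted 7.9%. Compounding the EDA market's $13 billion at 9.6% for six years gives about $22.5 billion by 2030. That projection is simple arithmetic on the cited numbers, not an additional forecast.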

Growth Drivers

The semiconductor industry is set to benefit from several long-term secular tailwinds, including advances in key technologies like AI, 5G, edge computing, and automotive developments. The semiconductor market is expected to more than double, from about $450 billion in 2019 to $1-1.3 trillion by 2030, with a compound annual growth rate (CAGR) of 6-8% (Allied Market Research & McKinsey & Company).

Key growth drivers include automotive electrification, where electric vehicles (EVs) are expected to comprise 70% of car sales by 2030, significantly increasing semiconductor content in vehicles (McKinsey & Company). The shift to autonomous vehicles (AVs) will require advanced chips for high-level computation (Allied Market Research).

Artificial Intelligence (AI) and machine learning (ML) are another critical catalyst, with the AI chip market projected to grow at a CAGR of 45%, reaching US$91 billion by 2025 and US$130 billion by 2030 (Allied Market Research).

Additionally, 5G technology is driving demand for higher-capacity, more efficient chips to support wireless infrastructure, with expectations for edge computing and hyperconnectivity to grow at similar rates (Allied Market Research & McKinsey & Company).

The shift from trailing-node to leading-node process technology continues to define semiconductor manufacturing, with leading-edge chips enabling advancements in 5G, IoT, edge AI, and cloud computing (McKinsey & Company). These innovations are essential for high-speed mobile devices, autonomous vehicle systems, and data centers, where reducing power consumption and increasing processing power are critical for future growth (Allied Market Research). The global semiconductor industry has matured from its volatile past, with increased discipline and consolidation leading to a few dominant players who drive innovation across these diverse sectors (Allied Market Research).

Further supporting growth, the push for technological sovereignty and government investments in semiconductor manufacturing capacity are expanding production and reducing supply chain risks. For example, the US has passed the CHIPS Act, with $52 billion in federal support, while South Korea and Japan are investing billions in R&D and facilities to strengthen their semiconductor ecosystems (Allied Market Research & McKinsey & Company).

These developments, paired with growing demand across end markets such as data centers, consumer electronics, and industrial robotics, are expected to propel the industry's robust growth well into the next decade (McKinsey & Company).

Artificial Intelligence (AI) & Machine Learning (ML)

Artificial Intelligence (AI) is when machines are built to think and make decisions like humans. Machine Learning (ML) is a part of AI where machines learn from data and improve on their own without being told exactly what to do.

Artificial Intelligence (AI) and Machine Learning (ML) have evolved from theoretical concepts into technologies that now shape industries globally. Although these fields have existed for decades, their impact became truly transformative only recently, thanks in part to breakthroughs by tech giants like Google. For many years, Google was at the forefront of AI innovation, from the pioneering deep learning research of its Google Brain team to game-changing tools like TensorFlow. However, with the rise of companies like OpenAI, the landscape has shifted and the pace of innovation has accelerated. This paper explores the history, development, and applications of AI and ML. It highlights the shifting dynamics in the industry and examines their potential to revolutionize everything from healthcare to the creative arts.

What is Artificial Intelligence (AI)?

AI is a field of computer science focused on creating machines that can perform tasks that typically require human intelligence. These tasks include:

Reasoning: The ability to make decisions based on available data.

Learning: AI systems can improve over time by learning from experience, much like humans do.

Perception: AI can interpret sensory input, like images or sounds, and make sense of it.

Decision-Making: AI can choose actions based on the data it has analyzed, similar to how humans make decisions.

AI also includes subfields like Natural Language Processing (NLP), robotics, and computer vision, which focus on specialized tasks like understanding language, controlling robots, and interpreting images, respectively.

Key Types of AI

Artificial Narrow Intelligence (ANI)

Definition: AI focused on one task, often referred to as "weak AI."

Examples: Virtual assistants (e.g., Siri, Alexa), recommendation systems, and spam filters.

Artificial General Intelligence (AGI)

Definition: Hypothetical AI capable of performing any intellectual task a human can do.

Status: AGI remains hypothetical; achieving it is a long-term goal for AI researchers.

Artificial Superintelligence (ASI)

Definition: AI that surpasses human intelligence in every domain, including creativity and emotional intelligence.

Implications: Raises ethical questions and is often a topic of discussion in both science fiction and philosophical debates.

What is Natural Language Processing (NLP)?

NLP is a specific area within AI that enables machines to understand, interpret, and generate human language. Whether it’s reading a document, translating a text, or holding a conversation, NLP helps AI systems process language in a way that’s similar to how humans communicate. NLP is used in applications like:

Chatbots and voice assistants (like Siri or Alexa)

Language translation services (such as Google Translate)

Text analysis tools (which can read and summarize documents)
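Before a machine can work with language, text has to be turned into something it can count or compute with. The sketch below shows one classic first step: splitting text into word tokens and counting them, a representation known as a "bag of words." It is a simplified illustration. The regular expression is an assumption, and modern LLMs use learned subword tokenizers instead of whole words.

```python
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens (letters, digits, apostrophes)."""
    return re.findall(r"[a-z0-9']+", text.lower())

def bag_of_words(text: str) -> Counter:
    """Count how often each token appears, ignoring word order."""
    return Counter(tokenize(text))

doc = "Chips power phones. Phones power apps, and apps need chips."
print(bag_of_words(doc).most_common(3))
```

Counting words discards word order, which is exactly the limitation that later models such as transformers were designed to overcome.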

What is Machine Learning (ML)?

Machine Learning (ML) is a subset of AI where machines can learn from data. Rather than being explicitly programmed with hard-coded instructions, ML systems analyze patterns in the data and use that information to make predictions or decisions. As they process more data, they continue to improve.

Some examples of ML include:

Fraud detection: Analyzing financial transactions to spot suspicious activity.

Recommendation engines: Suggesting products based on your browsing history (think Amazon or Netflix).

Autonomous driving: Cars that learn to drive by observing road patterns and adapting to different situations.

Key Machine Learning (ML) Techniques

Supervised Learning

Models are trained on labeled data with known outcomes. Examples: image classification, credit scoring, fraud detection.
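Supervised learning in its simplest form can be sketched as learning a rule from labeled examples. The hypothetical fraud-detection example below fits a "decision stump," a one-feature model that flags a transaction as fraud when its amount is at or above a threshold. It tries each candidate threshold and keeps the one that makes the fewest mistakes on the labeled data. The data and function names are illustrative assumptions. Real fraud models use many features and far more data.

```python
def train_stump(amounts: list[float], labels: list[int]) -> float:
    """Learn the amount threshold that best separates fraud (1) from normal (0).

    Predicts fraud when amount >= threshold; picks the threshold with the fewest errors.
    """
    best_threshold, best_errors = amounts[0], len(labels) + 1
    for candidate in sorted(set(amounts)):
        errors = sum((a >= candidate) != bool(y) for a, y in zip(amounts, labels))
        if errors < best_errors:
            best_threshold, best_errors = candidate, errors
    return best_threshold

def predict(amount: float, threshold: float) -> int:
    """Apply the learned rule to a new, unlabeled transaction."""
    return int(amount >= threshold)

# Toy labeled data (hypothetical): transaction amounts with known outcomes.
amounts = [12.0, 25.0, 40.0, 60.0, 950.0, 1200.0, 30.0, 1500.0]
labels = [0, 0, 0, 0, 1, 1, 0, 1]

threshold = train_stump(amounts, labels)
print(threshold, predict(20.0, threshold), predict(2000.0, threshold))
```

Nobody hard-codes the cutoff. It is inferred from the labeled outcomes, which is the defining feature of supervised learning.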

Unsupervised Learning

Models analyze data without labeled outcomes, identifying patterns. Examples: customer segmentation, market basket analysis.
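Customer segmentation is a typical unsupervised task. The sketch below uses k-means clustering (Lloyd's algorithm) on hypothetical annual spending figures. The algorithm is never told which customers belong together. It alternates between assigning each value to the nearest cluster center and moving each center to the mean of its members. For simplicity the data is one-dimensional and the initial centers are chosen deterministically. Both are assumptions made for the illustration.

```python
def kmeans_1d(values: list[float], k: int, iterations: int = 20) -> tuple[list[float], list[int]]:
    """Cluster 1-D values into k groups with Lloyd's k-means; no labels are used."""
    # Initialise centroids from evenly spaced points of the sorted data (deterministic).
    ordered = sorted(values)
    centroids = [ordered[i * (len(ordered) - 1) // max(k - 1, 1)] for i in range(k)]
    assignment = [0] * len(values)
    for _ in range(iterations):
        # Assignment step: attach each value to its nearest centroid.
        assignment = [min(range(k), key=lambda c: abs(v - centroids[c])) for v in values]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [v for v, a in zip(values, assignment) if a == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = sum(members) / len(members)
    return centroids, assignment

# Hypothetical annual spend per customer; the algorithm is not told which group is which.
spend = [20, 25, 30, 22, 400, 420, 390, 1000, 1100, 950]
centroids, groups = kmeans_1d(spend, k=3)
print([round(c) for c in centroids], groups)
```

The three groups that emerge (light, medium, and heavy spenders) are discovered from the data alone.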

Reinforcement Learning (RL)

AI learns through rewards and penalties based on interactions with its environment. Examples: game-playing AI (AlphaGo), robotics.
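The reward-driven loop can be illustrated with the simplest reinforcement learning setting, a multi-armed bandit. An agent repeatedly chooses between slot machines with unknown payout rates. It usually picks the machine it currently believes is best, and occasionally explores a random one (an "epsilon-greedy" strategy). It updates its estimates from the rewards it receives. This is far simpler than the methods behind AlphaGo. The payout probabilities and parameters are hypothetical.

```python
import random

def run_bandit(payout_probs: list[float], steps: int = 2000,
               epsilon: float = 0.1, seed: int = 0) -> list[float]:
    """Epsilon-greedy agent: learns each arm's average reward purely by trial and error."""
    rng = random.Random(seed)
    estimates = [0.0] * len(payout_probs)  # learned value of each action
    pulls = [0] * len(payout_probs)
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(len(payout_probs))  # explore: try a random action
        else:
            arm = max(range(len(payout_probs)), key=lambda a: estimates[a])  # exploit
        reward = 1.0 if rng.random() < payout_probs[arm] else 0.0  # environment feedback
        pulls[arm] += 1
        # Incremental mean: nudge the estimate toward the observed reward.
        estimates[arm] += (reward - estimates[arm]) / pulls[arm]
    return estimates

# Hypothetical slot machines; the agent is never told these probabilities.
estimates = run_bandit([0.2, 0.5, 0.8])
best = max(range(len(estimates)), key=lambda a: estimates[a])
print(best, [round(e, 2) for e in estimates])
```

The exploration rate is a trade-off. Too little exploration and the agent may lock onto a mediocre machine. Too much and it wastes pulls on machines it already knows are poor.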

Deep Learning

A subset of ML using deep neural networks to process large datasets. Examples: image recognition, speech recognition, autonomous vehicles.
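Stacking layers matters, and a classic example shows why. No single linear layer can compute XOR (true when exactly one input is true). A network with one hidden layer can. In the sketch below, the first layer computes intermediate features (roughly OR and NAND) and the second combines them. The weights are hand-picked for illustration rather than learned. Deep learning would find such weights automatically by gradient descent, across many more layers and far larger inputs.

```python
import math

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs: list[float], weights: list[list[float]], biases: list[float]) -> list[float]:
    """One fully connected layer followed by a sigmoid activation."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

# Hand-picked (not trained) weights: hidden unit 0 ~ OR, hidden unit 1 ~ NAND, output ~ AND.
HIDDEN_W, HIDDEN_B = [[20.0, 20.0], [-20.0, -20.0]], [-10.0, 30.0]
OUTPUT_W, OUTPUT_B = [[20.0, 20.0]], [-30.0]

def xor_net(a: float, b: float) -> float:
    hidden = dense([a, b], HIDDEN_W, HIDDEN_B)   # layer 1 extracts intermediate features
    return dense(hidden, OUTPUT_W, OUTPUT_B)[0]  # layer 2 combines them

for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, round(xor_net(a, b)))
```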

Generative AI and Large Language Models (LLMs)

Generative AI refers to AI systems that can create new content, like text, images, or music, by learning from existing data. This content can include:

Text generation (such as articles or poems)

Image creation (like DALL-E by OpenAI, which generates pictures from text descriptions)

Protein structure predictions (such as AlphaFold by DeepMind, which predicts how proteins fold)

Large Language Models (LLMs) are a specific type of generative AI that focuses on text. These models are trained using massive datasets to understand and generate human-like text. They use advanced architectures, like transformers, to process and generate text.
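The core operation inside a transformer is scaled dot-product attention, softmax(Q Kᵀ / √d) V. Each token scores every other token for relevance, and those scores become weights for averaging the tokens' value vectors. The minimal single-head sketch below uses plain Python lists. The toy vectors are hypothetical. In a real transformer, the queries (Q), keys (K), and values (V) are produced by learned projection matrices, and many attention heads and layers are stacked.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries: list[list[float]], keys: list[list[float]],
              values: list[list[float]]) -> list[list[float]]:
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Score every token's key against this token's query.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# Three toy 2-D token vectors (hypothetical); here Q, K and V are simply the tokens themselves.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention(tokens, tokens, tokens):
    print([round(x, 3) for x in row])
```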

Some well-known LLMs include:

GPT (Generative Pre-trained Transformer), created by OpenAI, can write essays, answer questions, and hold conversations.

BERT (Bidirectional Encoder Representations from Transformers), developed by Google, helps understand the meaning of words in context, which is crucial for tasks like search and question answering.

History of AI and ML

The Beginnings (1950s–1970s)

1950: Alan Turing proposed the Turing Test, a method to measure a machine's ability to exhibit intelligent behavior.

1956: The term "Artificial Intelligence" was officially coined during the Dartmouth Conference.

Early AI systems focused on symbolic reasoning, using predefined rules to solve problems.

The AI Winters (1970s–1990s)

Progress slowed due to limited computational power and funding challenges.

AI research shifted towards expert systems during this time, but success was mixed.

Resurgence and Growth (1990s–2010s)

Advancements in computational power, data storage, and the internet fueled the resurgence of AI research.

1997: IBM’s Deep Blue defeated world chess champion Garry Kasparov, marking a major milestone in AI.

2012: The rise of deep learning, particularly through models like AlexNet, revolutionized AI, especially in image recognition.

The Modern Era (2010s–Present)

The 2010s saw AI and ML experience a renaissance, driven by the convergence of big data, powerful computational resources, and advanced algorithms. Leading companies like Google and OpenAI played crucial roles in mainstreaming these technologies.

Google’s Breakthroughs:

Deep Learning & Neural Networks: Google's Google Brain project (founded in 2011) advanced deep learning, enhancing image recognition and speech processing.

2012: A Google Brain team led by Andrew Ng and Jeff Dean trained a large neural network that learned to recognize objects such as cats from unlabeled YouTube images. That same year, Geoffrey Hinton's University of Toronto group built AlexNet; Hinton joined Google in 2013.

2014: Google acquired DeepMind, which later developed AlphaGo, an AI that defeated the world champion of the game Go in 2016. This achievement highlighted AI’s capacity to solve complex problems that once required human intuition.

2015: Google released TensorFlow, an open-source deep learning library (version 1.0 followed in 2017). TensorFlow democratized AI research and development, making it easier to integrate AI into various products and services.

2018: Google unveiled BERT (Bidirectional Encoder Representations from Transformers), revolutionizing Natural Language Processing (NLP) by improving tasks like question answering and sentiment analysis.

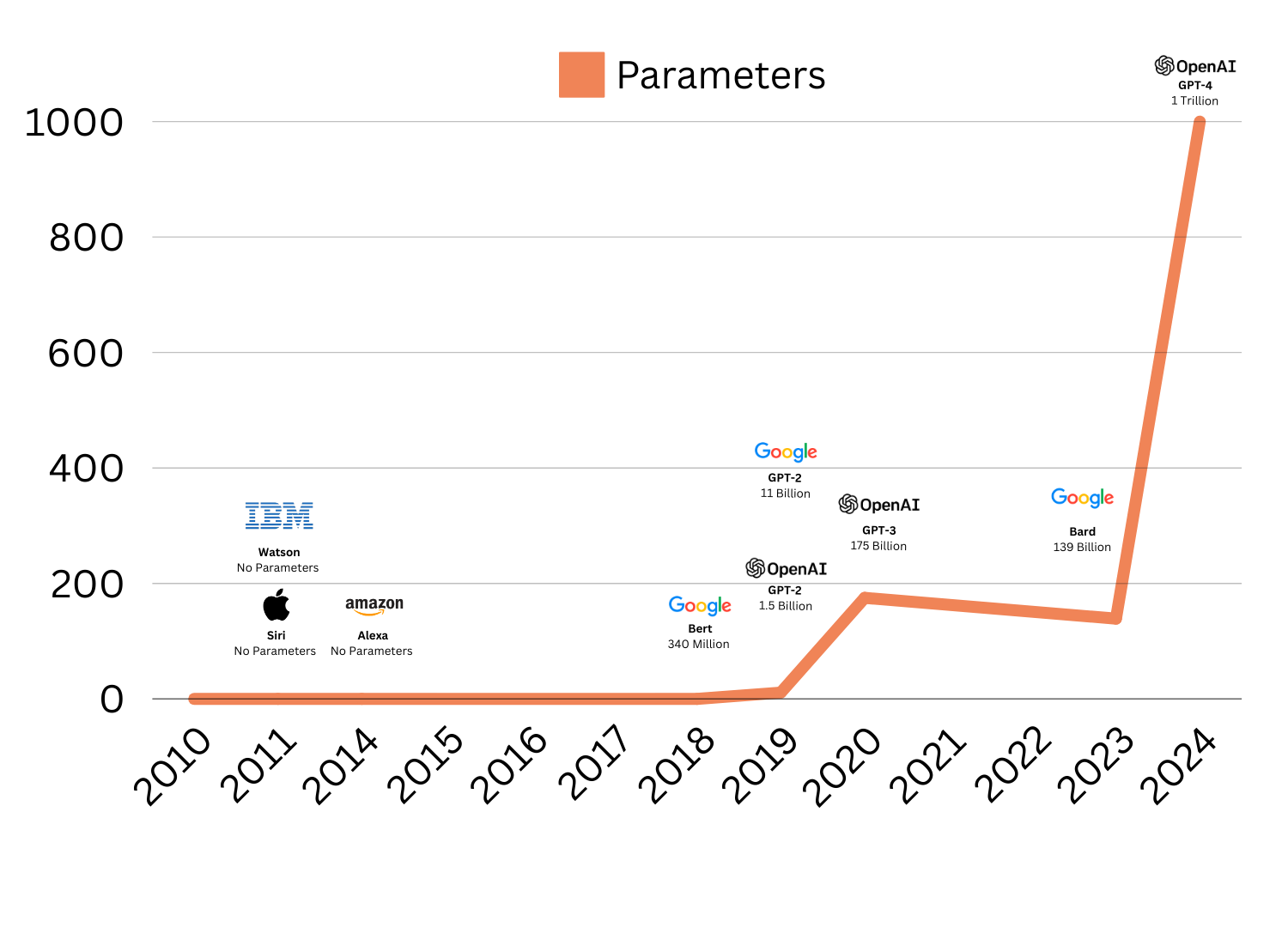

OpenAI’s Emergence:

Founded in 2015 by Elon Musk, Sam Altman, and others, OpenAI set out with the mission to ensure that artificial general intelligence (AGI) benefits humanity.

2019: OpenAI introduced GPT-2, the successor to its original 2018 GPT model. Its ability to generate human-like text sparked debates on the ethical implications of generative AI.

2020: OpenAI released GPT-3, a model with 175 billion parameters, pushing the boundaries of NLP with capabilities including writing essays, generating code, and answering complex queries.

2022: ChatGPT (based on GPT-3.5) was launched, propelling AI adoption in a wide range of sectors by focusing on conversational applications.

2023: OpenAI introduced GPT-4, offering advanced multimodal capabilities and enhanced reasoning abilities.

Google vs OpenAI (Microsoft)

Google and OpenAI are two of the leading companies driving advancements in artificial intelligence, but they differ in their approaches and business models. Google integrates AI into its vast array of products, such as search engines, YouTube, and cloud services, while also conducting cutting-edge research through its subsidiary, DeepMind. Its AI tools are focused on improving user experiences and business optimization. OpenAI, on the other hand, is focused on developing artificial general intelligence (AGI) that is safe, ethical, and beneficial for humanity. Through models like GPT and collaborations with Microsoft, OpenAI aims to provide powerful AI tools for a wide range of industries, including programming, customer service, and content generation. While Google’s AI is more embedded in its ecosystem, OpenAI is more externally focused, offering its AI capabilities through API services and maintaining a strong emphasis on AI governance.

BERT (Bidirectional Encoder Representations from Transformers) is a groundbreaking natural language processing (NLP) model developed by Google in 2018. It was designed to improve the understanding of language context by processing text bidirectionally: it considers the words both before and after a given word in a sentence, rather than reading only from left to right or right to left. This allows BERT to capture the nuances and meanings of sentences much better than previous models, making it highly effective for tasks like understanding search queries, answering questions, and classifying text. BERT significantly improved Google's search algorithms, enabling the system to return more relevant results for natural language queries.

The "T" in GPT (Generative Pre-trained Transformer) stands for "Transformer," a model architecture introduced by Google in 2017 with their paper, "Attention is All You Need." It revolutionized natural language processing (NLP) and machine learning by introducing a new way of handling sequence data (like text) that relies entirely on a mechanism called self-attention, eliminating the need for recurrence or convolution. The Transformer’s ability to understand relationships between words over long distances in a sentence laid the foundation for later models like GPT, which adopted and refined these techniques for generative language tasks.
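To make "self-attention" concrete, here is a minimal, single-head sketch of the scaled dot-product attention described in "Attention Is All You Need," written in plain Python with no libraries. The toy token vectors and identity projection matrices are illustrative assumptions; real models learn these weights, use many attention heads, and run on optimized tensor libraries.

```python
import math

def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    # Plain-list matrix product: a is n x k, b is k x m, result is n x m.
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(row) for row in zip(*m)]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x: one vector per token (n x d_model).
    w_q, w_k, w_v: query/key/value projection matrices (d_model x d_k).
    Returns the output vectors (n x d_k) and the attention weights (n x n).
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(w_k[0])
    # Every token is scored against every other token directly,
    # so distance within the sequence does not weaken the connection.
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    # Each output row is a weighted average of the value vectors.
    return matmul(weights, v), weights

# Toy example: three 2-dimensional token vectors and identity projections.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out, attn = self_attention(tokens, identity, identity, identity)
for row in attn:
    print([round(w, 3) for w in row])
```

Note that there is no loop over time steps: all token pairs are scored at once, which is what lets Transformers parallelize well, at the cost of an n x n score matrix that grows quadratically with sequence length.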

Google's work with the Transformer architecture directly influenced the development of models like GPT. Although GPT was introduced by OpenAI, it builds upon the Transformer framework pioneered by Google, adapting it for generative tasks (like creating coherent text) and pre-training on large datasets, which allows GPT models to perform a wide variety of tasks without task-specific fine-tuning.

GPT stands for Generative Pre-trained Transformer, which is a type of deep learning model used in natural language processing (NLP). It is designed to understand and generate human-like text based on input data. Here's a breakdown of what each part of the term means:

Generative: Refers to the model's ability to generate text, such as answering questions, completing sentences, or even creating entire paragraphs or articles, based on the input it receives.

Pre-trained: Indicates that the model is trained on a large corpus of text data before being fine-tuned for specific tasks. The pre-training helps the model learn patterns, grammar, context, and nuances of human language.

Transformer: This refers to the underlying architecture of the model. The Transformer architecture, introduced in a 2017 paper titled Attention is All You Need, is highly effective in handling sequential data and is the foundation for many state-of-the-art NLP models like GPT. It uses self-attention mechanisms to understand the relationships between words in a sentence, regardless of their position.
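The "generative" and "pre-trained" ideas can be illustrated with a deliberately tiny stand-in. The sketch below "pre-trains" by counting which word follows which in a toy corpus (a bigram model, not a Transformer) and then generates text one token at a time, feeding each prediction back in as input. The corpus and the function names are hypothetical; a GPT model replaces the counting table with a Transformer that scores every token in its vocabulary, but the token-by-token generation loop follows the same pattern.

```python
from collections import Counter, defaultdict

def pretrain_bigrams(corpus):
    """Toy 'pre-training': count how often each word follows another."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def generate(counts, prompt, max_new_tokens=5):
    """Greedy autoregressive generation: repeatedly append the most likely next word."""
    words = prompt.split()
    for _ in range(max_new_tokens):
        candidates = counts.get(words[-1])
        if not candidates:
            break  # no known continuation for the last word
        next_word, _ = candidates.most_common(1)[0]
        words.append(next_word)
    return " ".join(words)

corpus = [
    "chips power phones",
    "chips power cars",
    "chips power phones and cars",
]
model = pretrain_bigrams(corpus)
print(generate(model, "chips", max_new_tokens=3))  # chips power phones and
```

Greedy decoding always picks the top candidate; production chatbots usually sample from the predicted distribution instead, which is why the same prompt can yield different answers.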

AI Models Are Rapidly Scaling Parameters

Parameters

In simple terms, parameters in Large Language Models (LLMs) are the numerical "settings" (weights) the model learns during training. These settings help the model understand and generate human-like text. More parameters give a model more capacity to capture complex patterns, although accuracy also depends on the amount and quality of training data and compute. Early AI models had far fewer parameters; GPT-3 grew to 175 billion, and OpenAI has not disclosed GPT-4's parameter count. The increase in parameters allows these models to better understand context, generate smoother responses, and perform tasks like translation or summarization with greater accuracy.
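A rough way to see where those billions come from: for a decoder-only Transformer, a commonly used approximation puts the per-layer parameter count at about 12 × d_model², since each layer holds roughly 4·d_model² attention weights (query, key, value, and output projections) and 8·d_model² feed-forward weights (with a hidden layer four times wider). The sketch below applies this to GPT-3's published configuration (96 layers, d_model = 12,288, a vocabulary of about 50,257 tokens). It ignores biases, layer norms, and positional embeddings, so treat the result as an estimate.

```python
def estimate_transformer_params(n_layers, d_model, vocab_size):
    """Approximate parameter count of a decoder-only Transformer.

    Per layer: 4 * d_model^2 for the Q, K, V and output projections,
    plus 8 * d_model^2 for a feed-forward block with a 4x hidden width.
    Biases, layer norms and positional embeddings are ignored.
    """
    per_layer = 4 * d_model**2 + 8 * d_model**2
    token_embeddings = vocab_size * d_model
    return n_layers * per_layer + token_embeddings

gpt3_estimate = estimate_transformer_params(n_layers=96, d_model=12_288, vocab_size=50_257)
print(f"{gpt3_estimate / 1e9:.1f}B parameters")  # 174.6B parameters
```

Because the count grows with the square of the model width, doubling d_model roughly quadruples the parameters, which is one reason model sizes have climbed so quickly.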

AI Market: TAM, CAGR, and Growth Catalysts

Total Addressable Market (TAM): The global Artificial Intelligence (AI) market size was valued at approximately $200 billion in 2023 and is projected to reach $1.87 trillion by 2030, growing at a CAGR of 37.3% from 2023 to 2030 (Grand View Research), driven by widespread adoption across industries such as healthcare, finance, automotive, and retail. Generative AI, a sub-segment, is expected to grow from $13.71 billion in 2023 to over $109 billion by 2030, at a CAGR of 35.6% (IDC Blog) over the same period, reflecting its fast-evolving applications in language models, computer vision, and multimodal systems.
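CAGR figures like these can be sanity-checked with the standard formula CAGR = (end / start)^(1 / years) − 1. The helper names below are illustrative, and the inputs are the rounded figures quoted above, so small differences from the published rates are expected.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1

def project(start_value, rate, years):
    """Value after compounding `rate` for `years` years."""
    return start_value * (1 + rate) ** years

# Overall AI market figures quoted above, in billions of US dollars.
implied = cagr(200, 1_870, 2030 - 2023)
print(f"Implied CAGR 2023-2030: {implied:.1%}")
print(f"$200B compounded at 37.3% for 7 years: ${project(200, 0.373, 7):,.0f}B")
```

The implied rate lands within about half a percentage point of the quoted 37.3%; a gap of that size most likely reflects rounding of the $200 billion starting value.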

Key Growth Catalysts:

Industry-Specific Applications

Healthcare: AI for Diagnostics and Robotic Surgery

AI has made significant inroads into healthcare, improving diagnostics, patient care, and operational efficiency. Machine learning models are now used to assist in the early detection of diseases such as cancer, heart conditions, and neurological disorders by analyzing medical images, in some studies with accuracy comparable to or exceeding that of human experts. IBM Watson Health was an early, high-profile effort to apply AI in this area, although IBM sold the unit's assets in 2022 after it struggled to meet expectations. Furthermore, AI algorithms are advancing drug discovery, streamlining the process of identifying promising compounds and predicting their effectiveness.

In robotic surgery, systems such as Intuitive Surgical's da Vinci platform, which the surgeon controls from a console, enable minimally invasive procedures with greater precision and shorter recovery times. Vendors are increasingly adding machine learning and real-time data analytics to these platforms to support decision-making during procedures. These innovations are expected to help reduce healthcare costs and improve patient outcomes.

Finance: AI for Risk Management, Compliance, and Investment Analytics

AI is transforming finance by enabling more accurate predictions, improving operational efficiency, and ensuring better risk management. Machine learning algorithms are increasingly used to detect fraudulent transactions, optimize credit scoring, and manage market risk by analyzing vast amounts of data for patterns that would be too complex for traditional models.

For compliance, AI tools help financial institutions adhere to regulations by automating processes such as transaction monitoring and reporting suspicious activities. These systems can quickly identify discrepancies or unusual patterns that may indicate fraud or financial crimes, thereby reducing human error and improving security.
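As a simplified illustration of flagging "unusual patterns," the sketch below marks transactions whose amount lies far from an account's historical mean, measured in standard deviations (a z-score rule). This is a swapped-in statistical baseline, not how any particular institution's system works; production systems combine many features and learned models. The threshold of 3 and the sample amounts are assumptions.

```python
from statistics import mean, stdev

def flag_unusual(history, new_amounts, threshold=3.0):
    """Return the new amounts whose z-score against `history` exceeds `threshold`."""
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; needs at least 2 history points
    if sigma == 0:
        # No variation in history: anything different from the mean is unusual.
        return [a for a in new_amounts if a != mu]
    return [a for a in new_amounts if abs(a - mu) / sigma > threshold]

past_amounts = [42.0, 55.5, 38.2, 61.0, 47.3, 50.1, 44.8]
print(flag_unusual(past_amounts, [49.0, 58.0, 940.0]))  # [940.0]
```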

In investment analytics, AI is playing a crucial role in algorithmic trading and portfolio management. Machine learning models analyze market data, news sentiment, and financial reports to provide real-time trading recommendations and predictive insights. This has the potential to not only enhance profitability but also enable greater financial inclusivity by democratizing access to sophisticated investment tools.

Generative AI Expansion

Increased Demand for Large Language Models (LLMs)

Generative AI, particularly Large Language Models (LLMs) like GPT-4, has seen an explosion in demand across a wide range of industries. These models, which can generate human-like text, are now integral to customer service, content creation, and translation services. In content creation, LLMs are being used to draft articles, produce marketing copy, and generate product descriptions at scale. Their ability to create coherent, contextually aware, and creative text has led to their rapid adoption by companies looking to automate content generation, thus reducing costs and time-to-market.

In customer service, AI chatbots powered by LLMs are improving user experiences by providing 24/7 support that can handle complex inquiries, reducing wait times and freeing up human agents to deal with more nuanced issues. The ability of LLMs to understand and respond in natural language also allows for more personalized interactions with customers, leading to higher satisfaction and engagement.

In translation, large language models such as OpenAI's GPT models, along with neural machine translation systems like Google Translate, have changed the way businesses approach global communication, enabling faster, more nuanced translations with less need for human post-editing. This is particularly valuable as businesses expand into international markets and require scalable, effective language solutions.

Cloud Computing and AI-as-a-Service (AIaaS)

The integration of AI capabilities into cloud computing platforms has been a key factor in driving the AI market forward. Cloud service providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud have heavily invested in AI, offering machine learning services to businesses of all sizes. By utilizing cloud-based AI solutions, companies can access cutting-edge tools without the need for extensive in-house infrastructure or expertise, thereby lowering costs and accelerating AI adoption.

AI-as-a-Service (AIaaS) is democratizing access to advanced AI, providing smaller firms with tools that were once available only to large corporations. AIaaS allows businesses to integrate machine learning models for a variety of applications, such as predictive analytics, natural language processing, and image recognition, without needing deep technical know-how or the resources to build their own models. This cloud-based flexibility is expected to drive significant growth in sectors like retail, healthcare, and finance, where AI can offer a competitive edge.

Government Initiatives and Regulations

Government support for AI research and development is accelerating its deployment in various sectors. Countries like the United States, China, and the European Union have recognized the transformative potential of AI and are backing initiatives to lead in AI development. In the U.S., the National Artificial Intelligence Initiative Act (2020) aims to coordinate federal AI research efforts and maintain leadership in the field. Similarly, the European Union is focusing on AI ethics and responsible innovation, with frameworks like the Artificial Intelligence Act, which seeks to ensure the development and use of AI systems are ethical, transparent, and in line with human rights.

These regulatory frameworks aim to create a balanced environment where AI can be harnessed for growth while mitigating risks such as data privacy concerns, bias, and misuse. Governments also fund AI-driven innovations in healthcare, manufacturing, and cybersecurity, providing both the incentive and resources for continued AI adoption.

Consumer Trends

The rise of AI has profoundly impacted consumer experiences across various industries, particularly in retail, advertising, and entertainment. AI technologies enable hyper-personalization, tailoring products and services to individual preferences and behaviors, thus improving customer satisfaction and driving sales.

In retail, AI is used to analyze consumer purchasing behavior, predict trends, and personalize shopping experiences through recommendation engines. Retail giants like Amazon use AI to suggest products based on customer history and preferences, creating a more customized shopping experience that increases conversion rates and customer loyalty.
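One simple form of "suggesting products based on customer history" is item-to-item similarity: products bought by the same customers are treated as related. The sketch below uses cosine similarity over a tiny, made-up purchase history; it illustrates the general idea and is not Amazon's production algorithm. All customer and product names are hypothetical.

```python
import math

# Hypothetical purchase history: customer -> set of products bought.
purchases = {
    "ana":   {"laptop", "mouse", "usb_hub"},
    "ben":   {"laptop", "mouse"},
    "chloe": {"laptop", "usb_hub", "monitor"},
    "dev":   {"novel", "bookmark"},
}

def buyers_of(product):
    return {c for c, items in purchases.items() if product in items}

def cosine(a, b):
    """Cosine similarity of two products, based on which customers bought them."""
    buyers_a, buyers_b = buyers_of(a), buyers_of(b)
    if not buyers_a or not buyers_b:
        return 0.0
    return len(buyers_a & buyers_b) / math.sqrt(len(buyers_a) * len(buyers_b))

def recommend(customer, k=2):
    """Score products the customer lacks by their similarity to what they own."""
    owned = purchases[customer]
    catalog = set().union(*purchases.values())
    scores = {p: sum(cosine(p, o) for o in owned) for p in catalog - owned}
    # Highest score first; ties broken alphabetically for a stable result.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [p for p, s in ranked[:k] if s > 0]

print(recommend("ben"))  # ['usb_hub', 'monitor']
```

Because similarities are computed between items rather than between customers, they can be precomputed offline and reused for every shopper, which is what makes this style of recommender practical at large catalog sizes.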

In advertising, AI has transformed how businesses engage with potential customers. Machine learning models are used to optimize ad targeting, predicting consumer preferences based on browsing history, demographics, and even social media activity. This precision in targeting improves advertising ROI and can make ads less intrusive and more relevant for consumers.

In entertainment, AI is changing how content is delivered and consumed. Streaming services like Netflix and Spotify use AI-driven recommendation systems to suggest content that aligns with viewers' tastes, enhancing user engagement and retention. Additionally, AI is increasingly used in content creation itself, from generating scripts to producing music, further expanding its influence on the entertainment industry.

Cloud Computing

Cloud computing means using the internet to access things like storage, software, and servers, so you don’t need to own or manage the equipment yourself.

History and Evolution

Cloud computing evolved from earlier innovations, starting with mainframe time-sharing systems in the 1960s. These large-scale computers were capable of handling complex tasks and supporting many simultaneous users, primarily in universities and government institutions. In the 1990s, grid computing emerged as a precursor to cloud computing. This model connected multiple, often geographically dispersed, computers to work together, sharing resources. These pioneering systems made it possible for users to access and share computational power, setting the stage for the cloud computing revolution.

A key milestone in the evolution of cloud computing was the advent of virtualization, which enabled multiple virtual machines (VMs) to run on a single physical server. This innovation significantly improved resource management and operational efficiency. The real turning point, however, came in 2006, when Amazon Web Services (AWS) launched its core storage and compute services. AWS introduced a pay-as-you-go model for storage and computing, shifting the paradigm from owning and maintaining physical servers to renting scalable, on-demand resources. AWS's flagship offerings, S3 (Simple Storage Service) and EC2 (Elastic Compute Cloud), highlighted the cloud's vast potential, making it accessible to businesses of all sizes.

A defining moment for AWS came in 2013 when it secured a US$600 million cloud contract with the CIA. This deal involved creating a private cloud version of AWS's public infrastructure tailored to the CIA’s stringent security needs. AWS’s ability to offer unparalleled scalability and technical sophistication led to its selection over competitors like IBM, underscoring its dominance in sectors requiring high security and advanced technology, particularly in government and intelligence.

The cloud computing landscape became even more competitive with the entrance of Microsoft and Google. Microsoft Azure, launched in 2010, leveraged the company's deep integration with enterprise software. Azure's hybrid cloud solutions were particularly appealing to businesses looking to integrate on-premises systems with cloud services. Azure's ability to seamlessly bridge these two worlds made it an attractive choice for large enterprises with existing infrastructure, facilitating their transition to the cloud.

Google's cloud efforts began with App Engine in 2008, and Google Cloud Platform (GCP) went on to differentiate itself by emphasizing data analytics, artificial intelligence (AI), and machine learning. By positioning itself as a leader in these rapidly growing fields, GCP targeted industries that demanded advanced data processing capabilities. Google's expertise in AI and its powerful tools for big data analytics established it as a key player in the cloud ecosystem, especially for businesses focused on innovation in data-driven technologies.

Cloud Computing History Timeline

(Source: Gartner, IDC, Synergy Research Group, and Statista)

1960s – The Concept of Cloud Computing

1961: John McCarthy, a computer scientist, envisions the idea of utility computing, where computing power could be sold as a utility, like electricity.

1969: The first significant step toward cloud computing, ARPANET, is developed, laying the foundation for the internet.


1990s – Early Forms of Cloud Computing

1997: The term "cloud computing" begins to appear in business and academic usage (for example, in a lecture by Ramnath Chellappa) to describe a shift toward centralized, network-delivered data and computing resources.

1999: Salesforce.com is founded, becoming one of the first software-as-a-service (SaaS) companies.

2000s – The Rise of Cloud Services

2002: Amazon launches its first Amazon Web Services offerings for developers, laying the groundwork for what became AWS.

2006: AWS launches Simple Storage Service (S3), allowing users to store and retrieve any amount of data, and Elastic Compute Cloud (EC2), revolutionizing the way businesses use computing resources by introducing a pay-as-you-go model.

2008: Google launches Google App Engine, its first cloud platform service and the starting point for what became Google Cloud Platform (GCP).

2010s – Expansion and Competition

2010: Microsoft launches Azure (originally Windows Azure), offering cloud application hosting and storage, and later adds virtual machines and hybrid capabilities that integrate on-premises and cloud-based resources.

2011: AWS introduces Elastic Beanstalk, a platform-as-a-service (PaaS) solution to help developers deploy and manage applications.

2013: AWS secures a $600 million contract with the CIA to create a private cloud for government use, cementing its position as a leader.

2014: Google expands Google Cloud Platform, which increasingly emphasizes AI and machine learning.

2014: AWS announces Lambda (generally available in 2015), offering serverless computing that lets developers run code without provisioning or managing servers.

2019: IBM completes its acquisition of Red Hat (announced in 2018) to strengthen its hybrid cloud capabilities.

2020s – Dominance and Innovation

2020: Microsoft Azure and AWS continue to dominate the cloud market with a significant market share.

2020: Google Cloud expands with new enterprise partnerships and innovations, emphasizing AI and cloud-native applications.

2021: AWS continues to lead the cloud infrastructure market, while Microsoft Azure and Google Cloud innovate with AI, edge computing, and hybrid cloud models.

2022: OpenAI releases ChatGPT, which runs on Microsoft Azure.

2023: Companies increasingly adopt multi-cloud strategies, leveraging services from AWS, Microsoft Azure, Google Cloud, and other providers.

Cloud Business Models

Cloud computing can be categorized based on the services offered and the level of control granted to the user. These models define how cloud services are delivered and consumed:

Infrastructure-as-a-Service (IaaS):

Definition: Provides users with virtualized computing resources such as storage, compute power, and networking. Users can install and manage their own software applications and operating systems on top of this infrastructure.

Example: Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) all offer IaaS services where users can rent virtual machines (VMs) and storage, as well as deploy their applications.

Platform-as-a-Service (PaaS):

Definition: Offers a higher level of abstraction, providing a platform allowing customers to develop, run, and manage applications without worrying about the underlying infrastructure. PaaS typically includes operating systems, middleware, and development tools.

Example: Microsoft Azure App Service, Google App Engine, and AWS Elastic Beanstalk.

Software-as-a-Service (SaaS):

Definition: Provides users with access to ready-to-use software applications hosted and maintained by a third-party provider. These applications are typically accessed through a web browser, and users do not need to manage infrastructure or platform aspects.

Example: Google Workspace (formerly G Suite), Microsoft Office 365, Salesforce.

Function-as-a-Service (FaaS):

Definition: A type of serverless computing where users write and deploy functions (code snippets) that execute in response to events without the need to manage servers. The provider automatically handles the infrastructure scaling based on demand.

Example: AWS Lambda, Google Cloud Functions, Azure Functions.
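For a sense of what "deploying a function" means in practice, here is a minimal sketch written in the shape of an AWS Lambda Python handler, which the platform calls with an `event` and a `context` object. The event fields (`queryStringParameters`, `name`) assume an HTTP request routed through Amazon API Gateway's proxy integration; other triggers deliver differently shaped events. Because the handler is plain Python, it can be exercised locally by passing a dictionary, as in the last lines.

```python
import json

def lambda_handler(event, context):
    """Entry point the FaaS platform invokes once per event.

    There is no server loop here: the provider starts instances, routes each
    request to this function, and scales the number of instances with demand.
    """
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }

# Local test: simulate an API Gateway-style event; `context` is unused here.
response = lambda_handler({"queryStringParameters": {"name": "cloud"}}, None)
print(response["body"])  # {"message": "Hello, cloud!"}
```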

Cloud Deployment Models

Cloud services are deployed in different ways, based on the needs for control, security, and management:

Public Cloud:

Definition: Cloud services are provided over the internet by third-party vendors and are available to anyone who wants to use them. The cloud infrastructure is shared among multiple tenants (users).

Example: AWS, Microsoft Azure, and Google Cloud.

Private Cloud:

Definition: Cloud infrastructure is dedicated to a single organization. It can either be hosted on-site or by a third-party provider, offering more control and security.

Example: A company running its own data centers or using services like VMware to create private cloud environments.

Hybrid Cloud:

Definition: A combination of both public and private clouds, allowing data and applications to be shared between them. This model provides greater flexibility and optimization of existing infrastructure.

Example: A business might store sensitive data in a private cloud while using a public cloud for less sensitive workloads.

Multi-Cloud:

Definition: The use of multiple cloud computing services from different providers to avoid vendor lock-in and increase redundancy.

Example: A company using AWS for storage, Azure for computing, and Google Cloud for machine learning.

Key Players

The cloud computing market is dominated by a few hyperscale providers, often referred to as the "Big 3":

Amazon Web Services (AWS): The market leader, AWS is renowned for its scalability, comprehensive range of services, and global reach. Innovations such as Lambda (serverless computing) and SageMaker (machine learning platform) have solidified its position at the forefront of cloud technology.

Microsoft Azure: Popular among enterprise IT, Azure integrates seamlessly with Microsoft products like Office 365 and Dynamics. Its hybrid cloud capabilities, exemplified by Azure Arc, enable businesses to integrate on-premises systems with cloud environments, offering flexibility and compatibility for enterprises.

Google Cloud Platform (GCP): Specializing in big data analytics, artificial intelligence (AI), and machine learning (ML), GCP stands out for its AI-driven innovations, building on Google's TensorFlow framework and its custom Tensor Processing Units (TPUs). It has built strong partnerships in industries such as healthcare, focusing on advanced cloud-based solutions for data-heavy applications.

These hyperscalers—AWS, Azure, and GCP—are the giants of the cloud space, providing massive infrastructure and platforms that power a wide array of services globally. Other cloud providers, such as IBM Cloud (focused on AI and enterprise solutions), Oracle Cloud (specializing in databases and ERP solutions), and Alibaba Cloud (leading in Asia), offer specialized offerings but do not match the scale of the Big 3. Smaller players like DigitalOcean (developer-focused) and Cloudflare (edge computing) cater to niche markets but still contribute to the broader cloud ecosystem.

Total Addressable Market (TAM)

The global cloud computing market is projected to grow from $752.44 billion in 2024 to approximately $2.39 trillion by 2030, a compound annual growth rate (CAGR) of 21.2% (Grand View Research). This growth is driven by digital transformation, increasing SaaS adoption, and the rise of AI-driven workloads in cloud environments. Sectors such as BFSI (banking, financial services, and insurance), retail, and healthcare lead in cloud adoption, emphasizing scalability and innovation.

Next Stage of Growth

Secular Tailwinds Driving Long-Term Demand for Cloud Computing

The cloud computing landscape is undergoing a transformative shift, with emerging technologies, evolving business needs, and the digital transformation of industries all contributing to robust and sustained demand. Beyond traditional cloud computing, key drivers include the rise of hybrid and multi-cloud environments, the integration of AI and edge computing, the growth of serverless architectures, and the rapid expansion of digital infrastructure in emerging markets. Together with broader forces like the expansion of software applications, data collection, and increasing autonomy, these trends combine into powerful long-term secular tailwinds for cloud computing. Here's how each of these elements plays a vital role:

AI and Edge Computing: Enabling Real-Time Analytics and Automation

Artificial intelligence (AI) and edge computing are reshaping the cloud computing landscape. AI’s integration into cloud platforms is enhancing automation, streamlining processes, and improving decision-making. Concurrently, edge computing is cutting latency by processing data closer to the source, enabling real-time analytics for mission-critical applications. According to PwC, AI could contribute up to $15.7 trillion to the global economy by 2030, with cloud-based AI services being a major driver ("AI Predictions," PwC, 2021). Edge computing, projected to grow at a 37% CAGR from 2023 to 2030, is expected to reach a global market size of $72.6 billion by 2030 ("Edge Computing Market," MarketsandMarkets, 2023). This combination of AI and edge computing is transforming industries by supporting real-time decision-making and enabling advanced applications like autonomous vehicles and smart cities.

AI-Powered Automation: AI optimizes cloud resource allocation, scaling services based on demand, leading to improved efficiency and cost reduction.

Edge Computing for Real-Time Analytics: With the rise of IoT devices, edge computing reduces reliance on central cloud servers by processing data at the source, enhancing response times and decision-making.
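AI-driven allocation typically builds on rule-based autoscaling. As a reference point, the sketch below implements the proportional target-tracking rule documented for Kubernetes' Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The utilization figures and the minimum/maximum bounds are illustrative assumptions, and the sketch omits the tolerance band and stabilization windows real autoscalers apply. An AI-based system might feed a demand forecast into a rule like this instead of only the current reading.

```python
import math

def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas=1, max_replicas=20):
    """Proportional scaling: resize so average utilization moves toward the target."""
    raw = math.ceil(current_replicas * current_utilization / target_utilization)
    # Clamp to configured bounds so a spike cannot scale without limit.
    return max(min_replicas, min(max_replicas, raw))

print(desired_replicas(4, 90, 60))  # 6: traffic spike, scale out
print(desired_replicas(6, 20, 60))  # 2: quiet period, scale in
```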

Serverless Architectures: Simplifying Scalability and Reducing Operational Complexity

Serverless computing is gaining popularity due to its ability to streamline development and reduce infrastructure management. Serverless models allow developers to focus on application logic rather than server maintenance, providing a cost-effective and scalable solution. Forrester forecasts that the serverless computing market will grow from $7.3 billion in 2022 to $33.4 billion by 2027, at a 35% CAGR ("The Serverless Future," Forrester, 2022). This scalability is crucial for businesses with fluctuating workloads, offering efficient cost management and handling traffic spikes without over-provisioning infrastructure.

Cost-Efficiency and Scaling: Serverless architectures automatically scale based on demand, offering significant savings by charging only for resources used during specific functions, making it ideal for dynamic workloads.
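The pay-per-use claim can be checked with simple arithmetic. The sketch below compares a serverless bill (a per-request fee plus a fee per GB-second of execution time) with an always-on virtual machine billed hourly. All prices are hypothetical placeholders, not any provider's current rates, and free tiers are ignored; the point is the shape of the comparison.

```python
def serverless_monthly_cost(requests, avg_duration_s, memory_gb,
                            price_per_million_requests, price_per_gb_second):
    """Pay only for invocations and the compute time they actually use."""
    gb_seconds = requests * avg_duration_s * memory_gb
    return requests / 1_000_000 * price_per_million_requests + gb_seconds * price_per_gb_second

def vm_monthly_cost(hourly_rate, hours=730):
    """An always-on server is billed for every hour, busy or idle (~730 h/month)."""
    return hourly_rate * hours

# Hypothetical prices, for illustration only.
PRICE_PER_MILLION_REQUESTS = 0.20
PRICE_PER_GB_SECOND = 0.0000167
VM_HOURLY_RATE = 0.10

for monthly_requests in (100_000, 10_000_000, 100_000_000):
    fn = serverless_monthly_cost(monthly_requests, avg_duration_s=0.2, memory_gb=0.5,
                                 price_per_million_requests=PRICE_PER_MILLION_REQUESTS,
                                 price_per_gb_second=PRICE_PER_GB_SECOND)
    vm = vm_monthly_cost(VM_HOURLY_RATE)
    print(f"{monthly_requests:>11,} requests: serverless ${fn:,.2f} vs VM ${vm:,.2f}")
```

With these placeholder prices the function costs about $0.19, $18.70, and $187 per month at the three volumes, against a flat $73 for the VM. Serverless wins easily at low and moderate traffic, while a steadily busy workload can be cheaper on provisioned capacity, assuming one VM could carry that load.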

Emerging Markets: A Growth Catalyst for Cloud Adoption

Cloud adoption is surging in emerging markets, especially in the Asia-Pacific region. As internet penetration rises and governments drive digital transformation, businesses in these regions are bypassing traditional IT infrastructure in favor of cloud-based solutions. The cloud services market in Asia-Pacific (excluding Japan) is expected to reach $91.9 billion by 2026, growing at a 17.8% CAGR ("Asia-Pacific Cloud Market," IDC, 2022). Countries like India, Indonesia, and China are leading this growth, spurred by increasing internet access and government-led digital initiatives.

Asia-Pacific’s Rapid Growth: The region is experiencing rapid cloud adoption due to government priorities on digitization and infrastructure development in sectors like healthcare, education, and e-governance.

Expansion of Cloud Services in Emerging Economies: Cloud providers are setting up data centers in these regions to meet growing demand, enhancing service delivery while adhering to local regulations.

Digital Transformation of Industries: Driving Cloud Adoption Across Sectors

The digital transformation of industries, from healthcare and manufacturing to finance and retail, is accelerating the shift to cloud-based solutions. As businesses transition from legacy systems to cloud services, they gain enhanced agility, scalability, and operational efficiency. The global cloud SaaS market is expected to grow from $208.1 billion in 2022 to $385.9 billion by 2028, at a 10.7% CAGR ("Cloud SaaS Market Growth," Statista, 2022). In particular, the healthcare cloud computing market is forecasted to grow from $40.1 billion in 2021 to $118.3 billion by 2028 ("Healthcare Cloud Market," Fortune Business Insights, 2022).

Industry-Specific Solutions: Businesses are adopting tailored cloud platforms, such as CRM systems for sales or collaboration tools for remote teams, to streamline their operations and focus on core functions.

Operational Efficiency: Cloud services enable faster data processing, improved collaboration, and greater access to real-time insights, all of which contribute to driving competitive advantages in fast-moving industries.

Expansion of Software Applications and Data Collection

The demand for cloud services is closely tied to the growing volume of software applications and data generated across industries. Cloud computing is essential for storing and processing vast amounts of data, supporting the booming big data and analytics market, which is expected to grow from $274.3 billion in 2021 to $549.2 billion by 2028, at a 10.7% CAGR ("Big Data Market," Fortune Business Insights, 2022). Additionally, software as a service (SaaS) applications are moving to the cloud, providing businesses with scalable, on-demand solutions for a variety of needs, from payroll to customer relationship management.

SaaS Growth: The rise of cloud-based software applications is pushing up the demand for cloud infrastructure, with SaaS transforming the way businesses access and use software.

Big Data and Analytics: Cloud platforms are essential for handling the exponential growth of data, enabling organizations to perform advanced analytics and gain actionable insights.

Autonomy and the Growth of IoT and Robotics

The expansion of autonomous systems, IoT devices, and robotics is generating new cloud use cases. The IoT market is set to reach $1.6 trillion by 2025, and autonomous vehicles are expected to produce 6 exabytes of data annually by 2035 ("IoT Market Growth," Statista, 2023; "Intel Autonomous Vehicle Data," Intel, 2022). These technologies require distributed computing resources and real-time data processing, creating new demand for cloud platforms capable of supporting them.

Autonomous Systems: Self-driving cars and drones generate massive data streams. Time-critical processing happens on board or at the edge, while cloud platforms store the data, train the underlying models, and manage fleets, driving the need for powerful cloud solutions.

IoT and Connected Devices: With billions of IoT devices in operation, cloud services are essential for managing, storing, and analyzing the data these devices produce, driving innovation across multiple industries.